Written by Fernando Maciá

How to efficiently analyze the indexing and crawlability of our website using Screaming Frog, Webmaster Tools (aka Search Console) and Excel. We will cover the whole process, from the initial audit to preparing a complex migration and continuously monitoring a site. Iñaki Huerta, Optimization Marketing Consultant at IKAUE.

We use Screaming Frog because it is a fully SEO-oriented tool, and because the way it crawls resembles how Google’s crawler works. The basic way of finding new content is the same: through links. Google is clearly much more powerful, but the tool shows us how a site could be indexed through links alone, so we understand better what a robot sees on our website.

Another advantage: it is very cheap. It even has a free version, albeit limited to 500 URLs. In list mode it crawls without that limit.

Finally, it is constantly evolving and trying to adapt. It also integrates with Google Search Console.

Screaming Frog extracts a lot of information in the form of tables. Its usability is not great, since it is made by technicians, but it is really very effective. It generates a report of internal and external URLs, with all outgoing links.

Caution: sometimes Screaming Frog displays information in an unexpected way. For example, it mixes the HTTP response codes of your own URLs with those of external links.

And what do I do with the frog?

Three phases:

- Crawling

- Collection of criteria

- Analysis

Screaming Frog is very good at the first two, and not so good at the third because of the volume of data it handles. It is hard to work on the site as a whole.

You don’t go into the tool to look around; you go in to answer specific questions. Otherwise, you lose all your time.

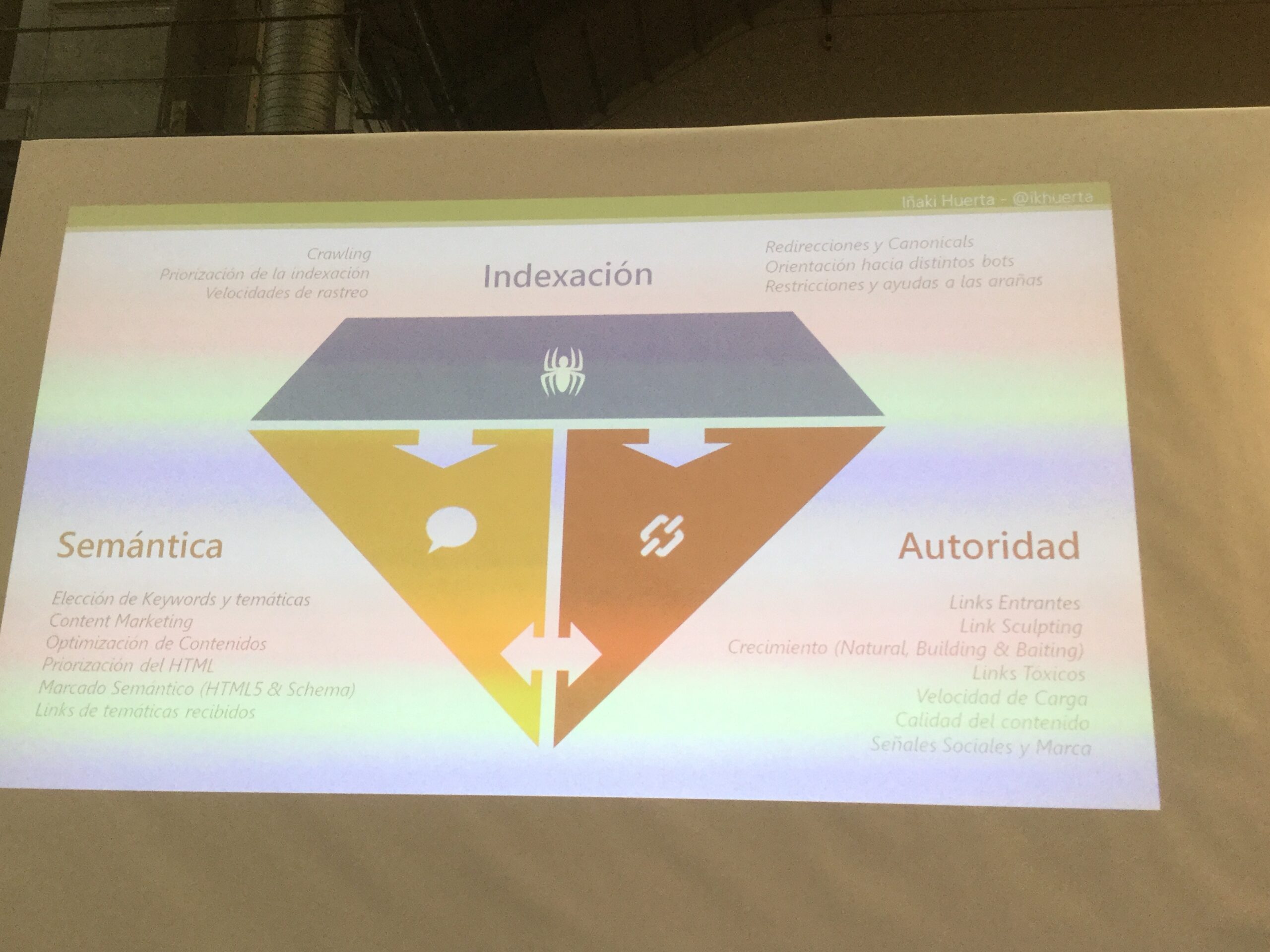

Indexing is the basic pillar. Next come semantics and authority. Screaming Frog helps us especially with indexing analysis.

It also helps with the other two: it lets us audit the internal semantics of the content, and it tells us about internal authority, that is, the internal links pointing to each page.

With that outline, I have specific questions that ScreamingFrog helps me answer:

- Basic technical analysis

- Onpage audit

- Structure analysis

- Migration control

Technical analysis

The geeky side of SEO: technical SEO audits. We launch Screaming Frog, go for coffee, and the tool extracts the basic information.

File types: loading problems, file errors, etc. This lets us identify basic problems.

Encoding problems: UTF-8, ISO… A well-built website must use the same character set throughout.

Server response codes: 200 OK, 301 redirects, 404 errors… Reviewing them lets us optimize the time spiders spend crawling. There should be NO internal links to redirects.
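The "no internal links to redirects" rule is easy to check once the crawl's link data is out of the tool. Here is a minimal sketch, assuming one row per internal link with the status code of its destination. The field names (`source`, `destination`, `status_code`) and the sample URLs are hypothetical, not Screaming Frog's real export headers.

```python
# Hypothetical rows: one per internal link found by the crawler.
# Field names are assumptions for illustration, not real export headers.
links = [
    {"source": "https://example.com/", "destination": "https://example.com/shop", "status_code": 200},
    {"source": "https://example.com/", "destination": "https://example.com/old-page", "status_code": 301},
    {"source": "https://example.com/shop", "destination": "https://example.com/missing", "status_code": 404},
]


def links_needing_fix(rows):
    """Return internal links whose target redirects (3xx) or errors (4xx/5xx)."""
    return [row for row in rows if row["status_code"] >= 300]


for row in links_needing_fix(links):
    print(f'{row["source"]} -> {row["destination"]} ({row["status_code"]})')
```

Each row returned is a link to edit at its source page so that it points straight at a URL answering 200.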

Unwanted URLs: this must be done manually. These are URLs that don’t have the expected form because someone messed up the HTML. Tricks: search for “%”, search for your domain appearing twice in the same URL, look for HTML leftovers… These things happen, and they can go unnoticed.
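The tricks above can be roughed out in a few lines to pre-filter a long URL list before reviewing it by hand. This is only a sketch: the function name, the sample domain and the exact patterns treated as "HTML leftovers" are my assumptions.

```python
import re

# Characters (raw or percent-encoded) that usually mean markup leaked into a URL.
# The exact pattern is an assumption chosen for illustration.
HTML_LEFTOVERS = re.compile(r"[<>\"']|%3C|%3E|%22", flags=re.IGNORECASE)


def suspicious_reasons(url, domain):
    """Return the reasons a crawled URL looks malformed (empty list if none)."""
    reasons = []
    if "%" in url:
        reasons.append("percent-encoded characters")
    if url.count(domain) > 1:
        reasons.append("domain appears twice")
    if HTML_LEFTOVERS.search(url):
        reasons.append("HTML leftovers")
    return reasons


for url in [
    "https://example.com/shop",
    "https://example.com/https://example.com/shop",
    "https://example.com/a%20b",
]:
    print(url, suspicious_reasons(url, "example.com"))
```

A flagged URL is not necessarily wrong (a legitimately encoded space also contains “%”), so the output is a shortlist for manual review, not a verdict.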

All of these are the technicians’ screw-ups.

From there, the objective: validate that the website is oriented towards the rankings we want.

We analyze the main tags, indexing directives, metadata, duplication, actual indexing of URLs, most important pages, conversion… All of it synchronized with Google data.

- Main tags collected: title, description, headings… It also pulls the keywords field, which is no longer used.

- Indexing directives collected: meta robots, canonical, next/prev… These can cause serious problems when they are not configured correctly. Beware of canonical screw-ups. Screaming Frog reads the robots.txt file and we can configure it to respect it.

- Analysis of titles (or other tags): lets you find duplicate titles, missing elements (the description, for example), titles that are too long or too short, etc. For ongoing tag monitoring there are better tools (SEMRush, Sistrix, OnPage.org, etc.), but it is very useful for a preliminary analysis.

- Links: lets you identify the most important pages of your site by number of incoming links, that is, according to your own architecture. These are the pages we have to take care of the most, because we are concentrating more authority on them.
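The title checks in the list above (missing, too short, too long, duplicate) can be sketched as follows. The length thresholds, the `url` and `title` field names and the sample pages are all assumptions for illustration; they are not values from the talk or from the tool.

```python
from collections import defaultdict

# Illustrative thresholds; assumptions, not values taken from the talk.
MIN_TITLE_LEN = 30
MAX_TITLE_LEN = 60


def audit_titles(pages):
    """Group URLs by title problem: missing, too_short, too_long, duplicate."""
    issues = defaultdict(list)
    urls_by_title = defaultdict(list)
    for page in pages:
        title = (page.get("title") or "").strip()
        if not title:
            issues["missing"].append(page["url"])
            continue
        urls_by_title[title.lower()].append(page["url"])
        if len(title) < MIN_TITLE_LEN:
            issues["too_short"].append(page["url"])
        elif len(title) > MAX_TITLE_LEN:
            issues["too_long"].append(page["url"])
    # A title shared by two or more URLs is reported for all of them.
    for urls in urls_by_title.values():
        if len(urls) > 1:
            issues["duplicate"].extend(urls)
    return dict(issues)


# Hypothetical sample data.
pages = [
    {"url": "/a", "title": "Buy red shoes online with free delivery"},
    {"url": "/b", "title": "Buy red shoes online with free delivery"},
    {"url": "/c", "title": ""},
    {"url": "/d", "title": "Shoes"},
]
print(audit_titles(pages))
```

The same grouping works for descriptions or headings by swapping the field and the thresholds.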

Extractions of elements

In addition, we can configure it to extract any element we are specifically interested in (Custom Extraction), which is extremely useful.

We can also extract custom tables of tags. Some very useful sample extractions are provided.

DataLayer: very powerful for Web analysts.

The problem: Screaming Frog goes down to the detail of everything.

It’s easy to get lost in isolated issues instead of focusing on the core problems of the site. The solution: Excel. We export everything and bring it into Excel.

We export the internal and external URL reports, analyze them with pivot tables, and set up our website indexing dashboard.
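The talk does this step in Excel. As a rough equivalent for readers who prefer a script, here is a minimal Python sketch of a two-way pivot over an exported crawl. The CSV content is made up, and the column names (`Address`, `Content Type`, `Status Code`) are assumptions about the export, so adjust them to whatever your file contains.

```python
import csv
import io
from collections import Counter

# Stand-in for an exported crawl file; the column names are assumptions.
EXPORT = """Address,Content Type,Status Code
https://example.com/,text/html,200
https://example.com/shop,text/html,200
https://example.com/old,text/html,301
https://example.com/logo.png,image/png,200
https://example.com/gone,text/html,404
"""


def pivot_counts(csv_text, row_field, col_field):
    """Count rows per (row_field, col_field) pair, like a two-way pivot table."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter((row[row_field], row[col_field]) for row in reader)


table = pivot_counts(EXPORT, "Content Type", "Status Code")
for (content_type, status), count in sorted(table.items()):
    print(f"{content_type:<10} {status:<4} {count}")
```

Counting per pair of fields is what keeps the view global: we see how many HTML pages redirect or fail before drilling into any single URL.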

Quality of URLs

Your job: crawl data.

Search engine analysis: how Google perceives it.

What it brings to your business: ranking results.

Screaming Frog lets you integrate Google Search Console by authorizing it from your Google account. From there, Search Console data is imported and added to each URL. It is very useful for seeing how Google perceives our indexing.

Google Analytics: authorization is granted in the same way, but importing data is somewhat more complex because we have to define which data we want. By default, Screaming Frog extracts the basic metrics. But if you have Ecommerce set up in Analytics, Screaming Frog lets you add all that data to each URL.

With the Analytics data integrated into each URL, we can prioritize the work much better.

Structure and crawl budget analysis

Google allocates a processing time to each site (its crawl budget), which is shown in Crawl Stats in Google Search Console. Since not all of our content is equally important for SEO, we need to prevent Google from wasting time on pages that do not add value. If we optimize this process, Google will index the interesting content better.

We can use:

- WPO

- Link Sculpting

- Next/Prev

- Robots.txt

- Meta robots

- Canonicals

- Etc.

This way we prioritize the indexing of different content and concentrate our rankings on certain URLs, giving more weight to some pages than to others. We have to adapt the information architecture to our content tree.
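Of the levers listed above, robots.txt is the easiest to verify with a script. Here is a minimal sketch using Python's standard `urllib.robotparser`; the rules and URLs are hypothetical. Note that this parser does plain prefix matching, so it is only an approximation of how Google interprets the file (wildcard rules, for instance, are not handled the same way).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps crawlers out of low-value sections.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def crawlable(url, user_agent="Googlebot"):
    """Return True if the parsed rules let user_agent fetch the URL."""
    return parser.can_fetch(user_agent, url)


for url in [
    "https://example.com/products/shoes",
    "https://example.com/search?q=shoes",
    "https://example.com/cart",
]:
    print(url, "crawlable" if crawlable(url) else "blocked")
```

Running the important URLs of the site through a check like this before publishing a new robots.txt helps catch a rule that blocks more than intended.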

We can see this in Screaming Frog’s Site Structure: the pages linking to each page and the URL level (distance in clicks from the home page). All of this tells us what priority we are actually assigning to a particular page.
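Both metrics are simple to reason about on a small link graph. The sketch below computes the level of each URL with a breadth-first search from the home page and counts the pages linking to each URL. The graph, the paths and the function names are hypothetical, and this is not how Screaming Frog itself is implemented.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: minimum number of clicks from home to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def inlink_counts(links):
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = {}
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] = counts.get(target, 0) + 1
    return counts


# Hypothetical site: each key links to the pages in its list.
SITE = {
    "/": ["/shop", "/blog"],
    "/shop": ["/shop/shoes", "/"],
    "/blog": ["/shop/shoes"],
    "/shop/shoes": [],
}

print(click_depths(SITE, "/"))
print(inlink_counts(SITE))
```

A page that matters for the business but sits many clicks deep, or receives few internal links, is a sign that the architecture is not giving it the priority we want.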

I can also extract the crawl path of a particular page.

Website migrations

Web migrations are always a sensitive time because they can take a toll on traffic. Screaming Frog gives you much more control over the process and lets you check that everything went well.

We start from the audit of the new site and, in addition, we can validate the links of the old site against the new one.

We analyze all this with an Excel macro, put Screaming Frog in list mode and check all the 301s.
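The talk uses an Excel macro for this comparison. As an alternative sketch in Python, the check boils down to comparing the planned redirect map with what the list-mode crawl of the old URLs actually returned. The data structures, URLs and function name are assumptions for illustration.

```python
def check_redirects(expected, crawled):
    """Compare planned 301 targets against what the list-mode crawl observed.

    expected: {old_url: new_url} from the migration plan.
    crawled:  {old_url: (status_code, redirect_target)} from the crawl.
    Returns {old_url: problem description} for every URL that fails.
    """
    problems = {}
    for old_url, new_url in expected.items():
        if old_url not in crawled:
            problems[old_url] = "not crawled"
            continue
        status, target = crawled[old_url]
        if status != 301:
            problems[old_url] = f"status {status} instead of 301"
        elif target != new_url:
            problems[old_url] = f"redirects to {target}, expected {new_url}"
    return problems


# Hypothetical migration plan and crawl results.
PLAN = {
    "http://old.example.com/shoes": "https://example.com/shop/shoes",
    "http://old.example.com/about": "https://example.com/about-us",
    "http://old.example.com/blog": "https://example.com/blog",
}
CRAWL = {
    "http://old.example.com/shoes": (301, "https://example.com/shop/shoes"),
    "http://old.example.com/about": (302, "https://example.com/about-us"),
    "http://old.example.com/blog": (301, "https://example.com/"),
}

for url, problem in check_redirects(PLAN, CRAWL).items():
    print(url, "->", problem)
```

An empty result means every old URL answers with a 301 pointing at its planned destination; anything else is a redirect to fix before traffic suffers.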