Written by Fernando Maciá

Index

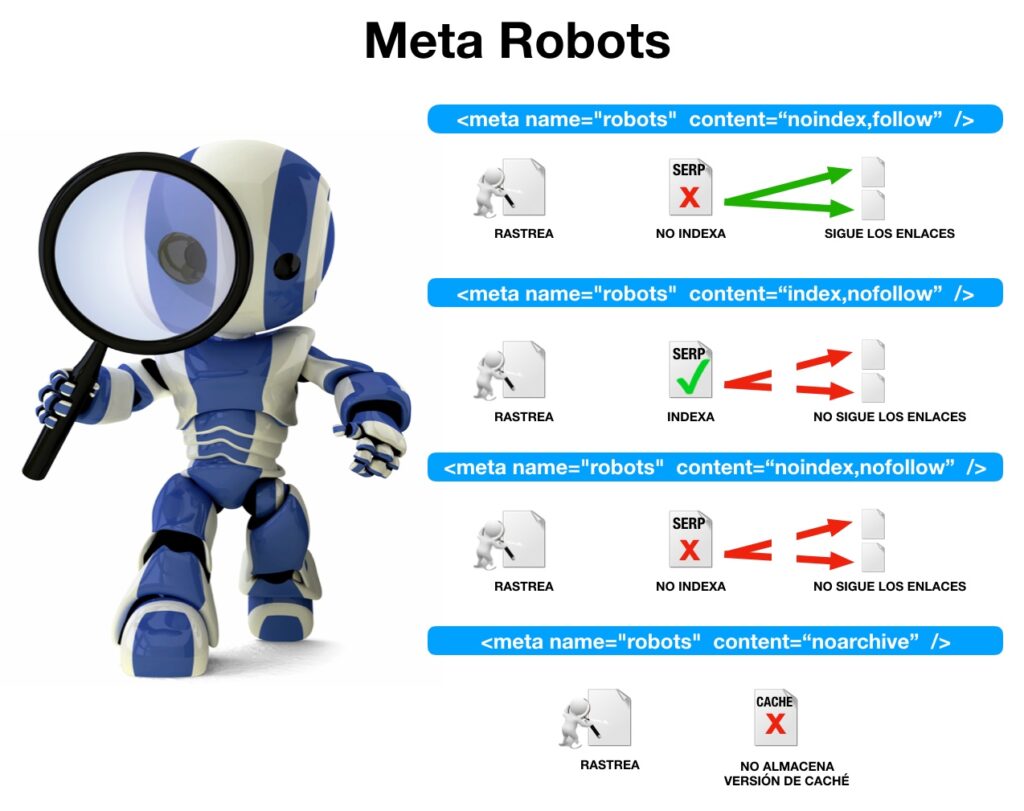

The meta robots is an HTML meta tag that serves to give instructions to search engine spiders regarding the crawling and indexing of the web page where it is implemented. With the meta robots we give guidelines to index or not to index a page, or to follow or not to follow links to other pages, preventing them from being crawled.

What is it used for?

Meta robots control how a page is indexed and how it is displayed to users on the search results page.

Its usual use is applied to indicate negative directives, since the tracker understands them as positive if not otherwise indicated. By default, they are already interpreted as positive.

It is obvious, but we should not specify any robots meta tags in case we want search engines to index the page. That is why we must be very careful not to include or remove them in those portals that use them in tests and that are going to launch the definitive version they want to index, so that the crawling is carried out without problems.

Where and how to implement the robots meta tag

The placement of the meta robots should be done within the <head> section of the page in question.

In addition, it needs to contain two attributes, name and content in order to function correctly.

Example of meta robots implementation:

<!DOCTYPE html>

<html>

<head>

...

<title>...</title>

<meta name="robots" content="noindex" />

...

</head>

<body>

...

</body>

</html>

Attribute content: Directives

The values that can accompany the robots meta tag in order to give different indications regarding the indexing or publication of the page, can be several and are indicated within the “content” attribute.

We can give as many guidelines as we deem appropriate as long as they are separated by commas when they are included.

Main directives of the meta robots:

- index / noindex: with which we will indicate to the crawlers if we want to index or not the web page in their search engines so that it appears or not in their search results. If we don’t want to index it, with noindex we will tell it not to show this page in its results.

- follow / nofollow: indicates to the spider whether to crawl and follow, or not, the links contained in the page in question.

- archive / noarchive, indicates whether or not we want the robot to be able to store the content of the web page in the internal cache of the browser.

- snippet / nosnippet: to show only the title and not the description in the search results.

- odp / noodp: when we do not want the search engines to extract metadata from the Open Project Directory, in the titles or descriptions of the page that they show in the results. (In general, it is understood that this directive is obsolete due to the fact that the Open Directory Project has fallen into disuse).

- ydir / noydir, similar to the previous one, but for the Yahoo! directory. (It is generally understood that this directive is obsolete because the Yahoo! Directory has fallen into disuse).

- translate / notranslate: to offer or not the translation of the page in the search results.

- noimageindex: not to index the images of the page.

- unavailable_after [RFC-850 date/time]: when we do not want the page to be displayed in the search results after the specified date and time.

Examples of the use of meta robots and interpretation of the directives

We cite some examples of meta robots and the meaning of what we want to indicate to search engine crawlers with it:

<meta name="robots" content="index,follow" />

It is the default tag of any web page and it is not necessary to include it. Tells the crawler to crawl, index and follow the links contained in the web page. It is unnecessary because this is the default behavior of any robot.

<meta name="robots" content="index,nofollow" />

We want the page to be indexed but we do not want the links contained in the page to be followed. It is usually included when we do not want the linked pages to be indexed either because they are blocked by a robots meta tag with the “noindex” directive or because they are blocked with a “disallow” instruction from the robots.txt file.

<meta name="robots" content="noindex,follow" />

In this case, we do not want the page that includes the meta to be indexed but we do want the robot to discover and crawl the pages linked from it. It is usually included in paginated results when the number of pages in the series is very large.

<meta name="robots" content="nofollow,noodp" />

When we do not want the links it contains to be crawled and we do not want to show the opd metadata in the page titles or descriptions.

Meta robots vs. robots.txt

Both the meta robots and the directives specified in the robots.txt file of our website can give us some control over which pages should be indexed and/or crawled and which should not. Although apparently they are two methods aimed at achieving the same objective, opting for one or the other at the SEO level will depend on the scenario and the specific objective pursued.

To understand the differences, it is important to distinguish between crawling and indexing. In crawling, search engines access content and “read” the information contained on a page. Subsequently, the search engine decides to index that content in one search category or another, or not to index it at all. If the search engine does not crawl a page, we save crawl time and optimize the crawl-rate, leaving more time for the robot to crawl the most important or current content. If, on the other hand, the robot crawls a piece of content but then does not index it, that content will not appear in the results either, but we will have wasted precious crawl-rate time that perhaps we could have made better use of.

Differences between meta robots and robots.txt at the crawling level

When accessing a new website, search engine robots try to locate the robots.txt file to query the directives specified therein. If a URL conforms to any of the patterns specified as “disallowed” in the robots.txt file, the robot will simply ignore that URL and will not bother to index it. This means that Google will not have to spend time crawling content that we don’t want it to index.

If, on the other hand, the Google robot finds the robots meta tag in a URL, this means that it has had to access and crawl that page. If we specify in the robots meta tag that we do not want the page to be indexed with the “noindex” directive, Google will not show this content in the results either.

Apparently, we have achieved the same goal: to prevent Google from displaying a certain URL in its results. But Google’s use of resources is different. With the robots.txt file, you did not have to access the URL and crawl its content, while with the robots meta tag you did have to spend some crawling time to do so.

On the other hand, a robots.txt file gives us a lot of flexibility in defining URL patterns that we don’t want Google to crawl, while a robots meta controls URL-to-URL indexing.

Differences between meta robots and robots.txt at the indexing level

Although a “disallow” directive in the robots.txt file will prevent Google from accessing a particular URL from a link in our own Web, it is still possible for Google to “discover” and crawl such a URL if it finds a link pointing to it from a different website, i.e. an external link. This is the reason why we can discover URLs for which Google does not show any description when searching with site:domain.com.

Conversely, a robots metatag with the “noindex” directive will cause Google to stop displaying that URL in its results altogether. Google will crawl the URL but will not add it to its index (obeying this “noindex” instruction).

Conclusion

If we prohibit the crawling of a URL in the robots.txt file, search engines will not invest time in crawling content unless they discover it from an external link, so we can optimize the time that the robot spends crawling our content, but is not as effective if we do not want a page to be displayed in the results under any circumstances.

If we prohibit the indexing of a URL from the robots meta tag, Google will not index that URL and will never show it in its results, but we will have wasted precious crawling time for the spider.

Other ways to control the indexing of a specific URL

In addition to the robots metatag and the robots.txt file, there are other ways to control the indexing of pages on a website. For example:

Link element “canonical”

When we specify a “canonical” link element, we are suggesting to the search engine which URL we want it to index that content with. This URL can be exactly the same as the one used to access the content (canonical self-referential) or canonical page, or it can be different, in which case we would speak of a canonicalized URL. In the latter case, we can tell Google not to index different URLs for content that could be detected as duplicate, such as, for example, lists of content ordered under different criteria, variants of the same product in different colors or sizes, etc.

Although it should be noted that for search engines, a “canonical” is only a suggestion. If the search engine finds a significant number of internal or external links to a given URL it will probably index it even if it is canonicalized to a different URL (i.e., even if it includes a “canonical” link element pointing to another page).

Parameter management in Google Search Console

Another way to control the indexing or not of certain URLs can be found in the Parameter Management section of the Google Search Console tool. With it we can specify which parameters should be indexed in a URL (e.g. product ID numbers) and which should not (e.g. campaign tracking or content reordering parameters).

Conclusion

Both the “canonical” link element and parameter management in Google Search Console are methods to avoid indexing URLs that would lead to a potential duplicate content problem. Whenever possible, it is advisable to be consistent in the definition of canonical URLs and the specification made from Google Search Console parameter management because although this functionality is focused on avoiding the indexing of duplicate content, it is important to understand the different effects we achieve with each of the indexing management methods to apply the appropriate one in our specific scenario.