Written by Ramón Saquete

Index

- Actions to optimize the download and compilation of JavaScript code

- Actions to optimize JavaScript code execution

- Avoid using too much memory

- Prevent memory leaks

- Use web workers when you need to execute code that requires a long execution time.

- Using the Fetch API (AJAX)

- Prioritize access to local variables

- If you access a DOM element several times save it in a local variable

- Group and minimize DOM and CSSOM reads and writes

- Use the requestAnimationFrame(callback) function in animations and scroll-dependent effects.

- If there are many similar events, group them together

- Beware of events that are triggered several times in a row

- Avoid executing code strings with eval(), Function(), setTimeout() and setInterval()

- Implement optimizations that you would apply in any other programming language

- Tools to detect problems

- Final recommendations

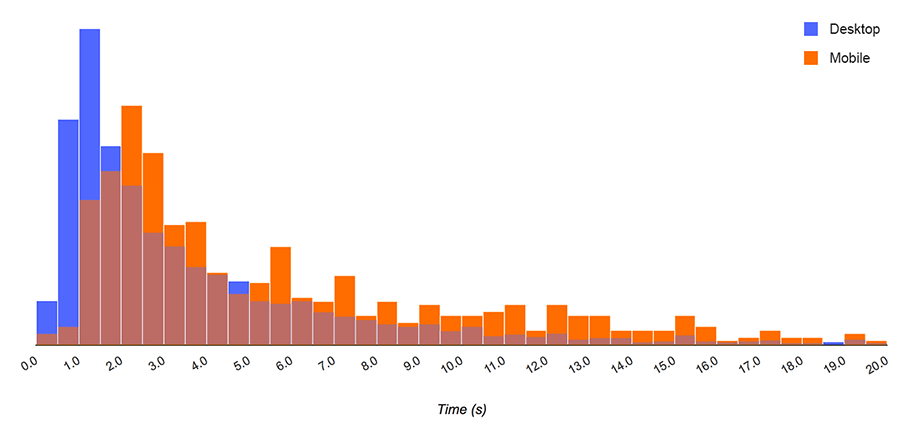

With users shifting from desktop to mobile devices, client-side optimization of web pages is becoming more important than in the past, matching the importance of server-side optimization. Making a website fast on slower devices, over connections that may be poor or even drop out depending on coverage, is not easy, but it is necessary. A faster site means more satisfied users who visit us more often, and better rankings in mobile search.

In this article I discuss some of the points that deserve the most attention when optimizing JavaScript that runs on the client. First, we will see how to optimize its download and compilation. Second, we will see how to optimize its execution so that the page performs well. By performance I mean the definition given by Google's RAIL model, whose acronym stands for:

- Response: the interface responds to the user in less than 100 ms.

- Animation: each animation frame is drawn in 16 ms, which gives 60 FPS (frames per second).

- Idle: when the user is not interacting with the page, each piece of background work should not last more than 50 ms.

- Load: the page must load in 1000 ms.

These times should be achieved in the worst case (when running the website on an old mobile phone), with limited processor and memory resources.

Below I first explain the actions needed to optimize download and compilation, and then those needed to optimize code execution. This second part is more technical, but no less important.

Actions to optimize the download and compilation of JavaScript code

Browser caching

There are two options here. The first is the JavaScript Cache API, which we can use by installing a service worker. The second is the HTTP protocol cache. With the Cache API, our application can also work in offline mode. With the HTTP cache, roughly speaking, we configure the Cache-Control header with the public and max-age directives and a long cache time, such as one year. Then, to invalidate this cache, we rename the file.
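Here is a minimal sketch of the HTTP-cache option. It assumes a Node.js server and a hypothetical naming scheme in which every file name carries a hash of its contents. That way, renaming the file happens automatically whenever the code changes. The helper names are illustrative, not part of any library.

```javascript
const crypto = require("crypto");

// One year in seconds. A long max-age is safe because the file name changes
// whenever its content changes, so browsers simply request the new name.
const ONE_YEAR_SECONDS = 365 * 24 * 60 * 60;

// Hypothetical naming scheme: "app.js" becomes "app.<8 hex chars>.js",
// where the hex chars are a hash of the contents. Assumes an extension exists.
function versionedFileName(baseName, contents) {
  const hash = crypto.createHash("sha256").update(contents).digest("hex").slice(0, 8);
  const dot = baseName.lastIndexOf(".");
  return `${baseName.slice(0, dot)}.${hash}${baseName.slice(dot)}`;
}

// Response headers for a versioned JavaScript file.
function cacheHeadersForScript() {
  return {
    "Content-Type": "text/javascript; charset=utf-8",
    "Cache-Control": `public, max-age=${ONE_YEAR_SECONDS}`,
  };
}
```

With Node's http module, for instance, res.writeHead(200, cacheHeadersForScript()) would send these headers along with the versioned file.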

Compressing with Brotli q11 and Gzip

Compressing the JavaScript code reduces the bits transmitted over the network and, therefore, the transmission time. However, it increases processing time on both sides: the server must compress the file and the client must decompress it. We can avoid the server cost by keeping a cache of compressed files on the server. The client cost remains, though: decompression time plus the transmission time of the compressed file may exceed the transmission time of the uncompressed file. In that case, the technique slows down the download. This only happens with very small files and at high transmission speeds. We cannot know the user's transmission speed, but we can tell our server not to compress very small files, for example, files smaller than 280 bytes. For fast connections, above 100 Mb/s, this threshold should be much higher. This low value, however, favors users on mobile connections with poor coverage, where the performance loss is more pronounced, at the cost of being slightly slower on fast connections.

The newer Brotli compression algorithm compresses about 17% better than Gzip. If the browser sends the value “br” in the Accept-Encoding header of its HTTP request, the server can send the file in Brotli format instead of Gzip.
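Here is a sketch of this negotiation, assuming a Node.js server and its built-in zlib module. The 280-byte threshold comes from the text. The function names are hypothetical, and the Accept-Encoding parsing is deliberately simplified (it ignores q-values).

```javascript
const zlib = require("zlib");

// Threshold from the text: files below this size are sent uncompressed.
const MIN_COMPRESS_BYTES = 280;

// Simplified Accept-Encoding check: looks for the token and ignores q-values.
function acceptsEncoding(acceptEncoding, name) {
  return (acceptEncoding || "")
    .split(",")
    .some((part) => part.split(";")[0].trim().toLowerCase() === name);
}

// Prefers Brotli at quality 11, then Gzip, then no compression.
// Returns the bytes to send and the Content-Encoding value (or null).
function compressForClient(body, acceptEncoding) {
  const buffer = Buffer.from(body);
  if (buffer.length < MIN_COMPRESS_BYTES) {
    return { encoding: null, data: buffer };
  }
  if (acceptsEncoding(acceptEncoding, "br")) {
    const data = zlib.brotliCompressSync(buffer, {
      params: { [zlib.constants.BROTLI_PARAM_QUALITY]: 11 },
    });
    return { encoding: "br", data };
  }
  if (acceptsEncoding(acceptEncoding, "gzip")) {
    return { encoding: "gzip", data: zlib.gzipSync(buffer, { level: 9 }) };
  }
  return { encoding: null, data: buffer };
}
```

Quality 11 is the slowest Brotli setting. In line with the text's advice, the compressed result would normally be cached per file rather than recomputed on every request.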

Minimize

It consists of using an automatic tool to remove comments, spaces and tabs, and to shorten variable names, so that the code takes up less space. Minimized files should be cached on the server or generated already minimized when they are uploaded. If the server has to minimize them on every request, performance will suffer.

Unify JavaScript code

This optimization technique is not very important if our website uses HTTPS and HTTP/2, since this protocol multiplexes all the files over a single connection, almost as if they were one. But if our website uses HTTP/1.1, or we expect many visitors with older browsers that use this protocol, unifying files is necessary to optimize the download. Do not go overboard and put all of the website's code in a single file, though. Sending only the code the user needs on each page can considerably reduce the bytes to download. To do this, we separate the code base needed by the entire portal from the code executed on each individual page. This gives us two JavaScript files for each page: one with the basic libraries common to all pages and another with the page-specific code. With a tool like webpack we can unify and minimize these two groups of files in our development project. Make sure the tool you use generates so-called source maps. These are .map files, referenced from the final file (usually through a sourceMappingURL comment), that record the relationship between the minimized, unified code and the original source files. This way we can debug the code later without problems.
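As an illustration only, here is a webpack configuration along these lines. It assumes webpack 5 and hypothetical entry files under src/pages/. It would produce one minified bundle per page, a shared vendor bundle with the libraries from node_modules, and a source map for each output file.

```javascript
// webpack.config.js (sketch; entry paths are hypothetical)
const path = require("path");

module.exports = {
  mode: "production", // production mode minifies the output by default
  entry: {
    home: "./src/pages/home.js",
    product: "./src/pages/product.js",
  },
  output: {
    path: path.resolve(__dirname, "dist"),
    filename: "[name].[contenthash].js", // the name changes when the content changes
  },
  devtool: "source-map", // emits a .map file next to each bundle
  optimization: {
    splitChunks: {
      cacheGroups: {
        // Libraries from node_modules go into one bundle shared by all pages.
        vendor: {
          test: /[\\/]node_modules[\\/]/,
          name: "vendor",
          chunks: "all",
        },
      },
    },
  },
};
```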

Unifying everything in a single, larger file has one advantage: all of the website's JavaScript can be cached in the browser on the first visit, so on subsequent loads the user will not have to download it again. I therefore recommend this option only if the byte savings of the previous technique are practically negligible and we have a low bounce rate.

Mark JavaScript as asynchronous

We must include the JavaScript as follows:

<script async src="/codigo.js"></script>

This way, the script tag does not block the DOM construction stage of the page while the file is downloaded.

Do not use JavaScript embedded in the page

Using the script tag to embed code in the page also blocks DOM construction, even more so if the document.write() function is used. In other words, this is prohibited:

<script>document.write("Hello world!");</script>

Load JavaScript in the page header with async

Before the async attribute existed, the recommendation was to put all script tags at the end of the page so they would not block its construction. This is no longer necessary. In fact, it is better to place them at the top, inside the <head> tag, so that the JavaScript starts downloading, parsing and compiling as soon as possible, since these are the phases that take the longest. If the async attribute is not used, the JavaScript must go at the end.

Remove unused JavaScript

This step reduces not only the transmission time but also the time the browser takes to parse and compile the code. To do so, keep the following points in mind:

- If it is detected that a feature is not being used by users, we can remove it and all its associated JavaScript code, so that the web will load faster and users will appreciate it.

- We may also have included an unnecessary library by mistake, or we may be using libraries for functionality that all browsers already provide natively, faster and without additional code.

- Finally, if we want to optimize to the extreme, no matter how long it takes, we should remove the code we are not using from the libraries. But I do not recommend it, because we never know when we might need it again.
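For example, several helpers that projects often import from utility libraries have native equivalents in current browsers. The sketch below is illustrative. The helper names are hypothetical, and the equivalences hold for the simple cases shown (the merge, for instance, is shallow).

```javascript
// Remove duplicates, instead of a library "uniq" helper.
const uniq = (items) => [...new Set(items)];

// Flatten one level, instead of a library "flatten" helper.
const flatten = (items) => items.flat();

// Shallow merge of objects, instead of a library "extend" helper.
const merge = (...objects) => Object.assign({}, ...objects);

// Membership test, instead of a library "contains" helper.
const contains = (items, value) => items.includes(value);
```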

Defer loading of JavaScript that is not required

This should be done with functionality that is not needed for the initial rendering of the page, that is, features the user has to trigger with some action. That way we avoid loading and compiling JavaScript code that would delay the initial display. Once the page is fully loaded, we can start loading those features so that they are immediately available when the user starts interacting. In the RAIL model, Google recommends doing this deferred loading in blocks of 50 ms so that it does not affect the user's interaction with the page. If the user interacts with a feature that has not been loaded yet, we must load it at that moment.
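Here is a minimal sketch of this pattern. It assumes the deferred features are separate ES modules loaded with dynamic import(). lazyFeature and runInSlices are hypothetical helper names, and the 50 ms budget is the RAIL figure mentioned in the text.

```javascript
// Wraps a loader such as () => import("./chat-widget.js") (hypothetical module)
// so the code is requested, parsed and compiled at most once.
function lazyFeature(loader) {
  let promise = null;
  return function load() {
    if (!promise) promise = loader();
    return promise;
  };
}

// Runs small preload tasks in slices of about budgetMs milliseconds,
// yielding to the event loop between slices so user input is not blocked.
function runInSlices(tasks, budgetMs = 50) {
  return new Promise((resolve) => {
    let index = 0;
    function slice() {
      const start = performance.now();
      while (index < tasks.length && performance.now() - start < budgetMs) {
        tasks[index++]();
      }
      if (index < tasks.length) setTimeout(slice, 0);
      else resolve();
    }
    setTimeout(slice, 0);
  });
}
```

Used in a page, the same load function would serve both paths. It would run after window's load event through runInSlices, and it would also be called directly in the click handler that needs the feature, so the user never waits for a second download.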

Actions to optimize JavaScript code execution

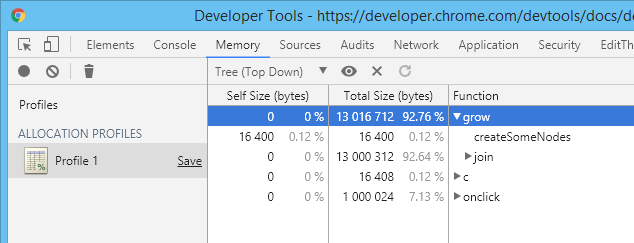

Avoid using too much memory

We cannot say how much memory is too much, but we should always try not to use more than necessary, because we do not know how much memory the device running the website will have. When the browser's garbage collector runs, JavaScript execution can be paused. The more new memory our code asks the browser to allocate, the more often the collector has to run. If this happens frequently, the page will be slow.
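As an illustration with hypothetical function names, code that runs very often (for example, on every animation frame) can avoid creating short-lived objects by reusing one allocated in advance. The trade-off is that callers must copy the values if they need to keep them.

```javascript
// Allocating version: creates a new result object on every call.
function centroid(xs, ys) {
  let sx = 0;
  let sy = 0;
  for (let i = 0; i < xs.length; i++) {
    sx += xs[i];
    sy += ys[i];
  }
  return { x: sx / xs.length, y: sy / ys.length };
}

// Reusing version: the result object is allocated once and overwritten,
// so repeated calls produce no new garbage for the collector.
function createCentroid() {
  const result = { x: 0, y: 0 };
  return function centroidInto(xs, ys) {
    let sx = 0;
    let sy = 0;
    for (let i = 0; i < xs.length; i++) {
      sx += xs[i];
      sy += ys[i];
    }
    result.x = sx / xs.length;
    result.y = sy / ys.length;
    return result; // same object every time: copy it if you need to keep it
  };
}
```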

In the “Memory” tab of Chrome’s developer tools, we can see the memory occupied by each JavaScript function:

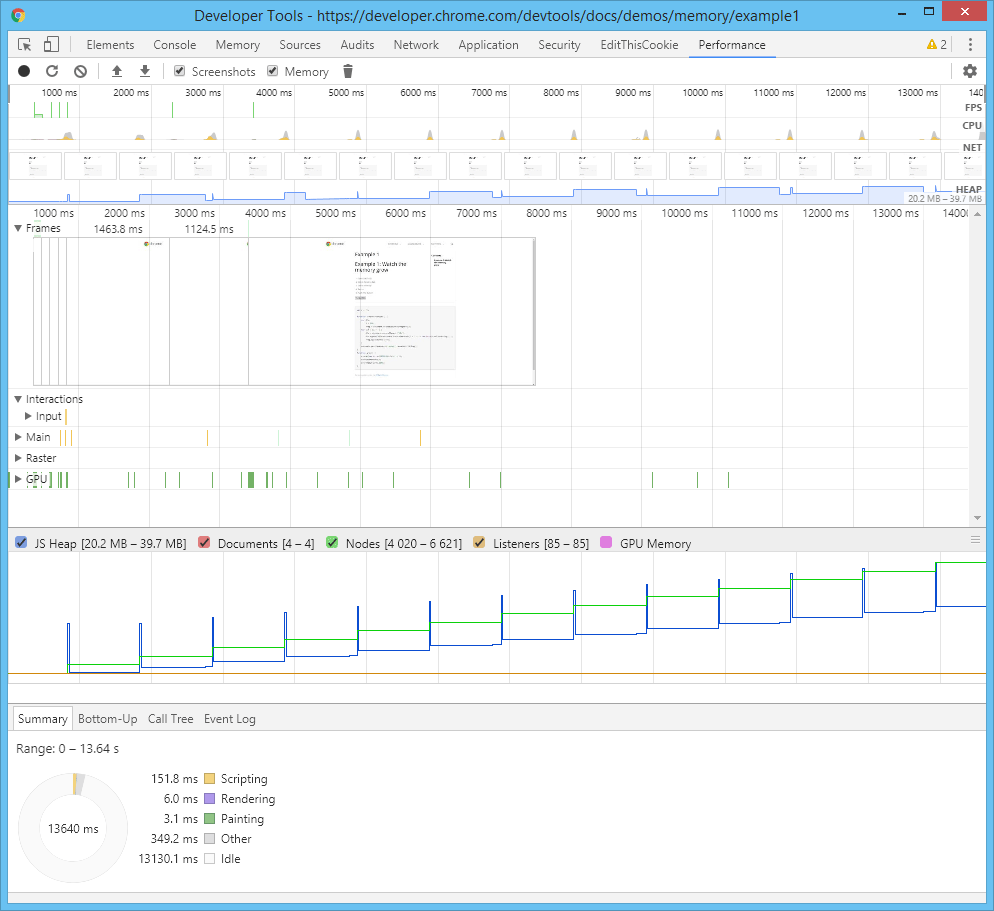

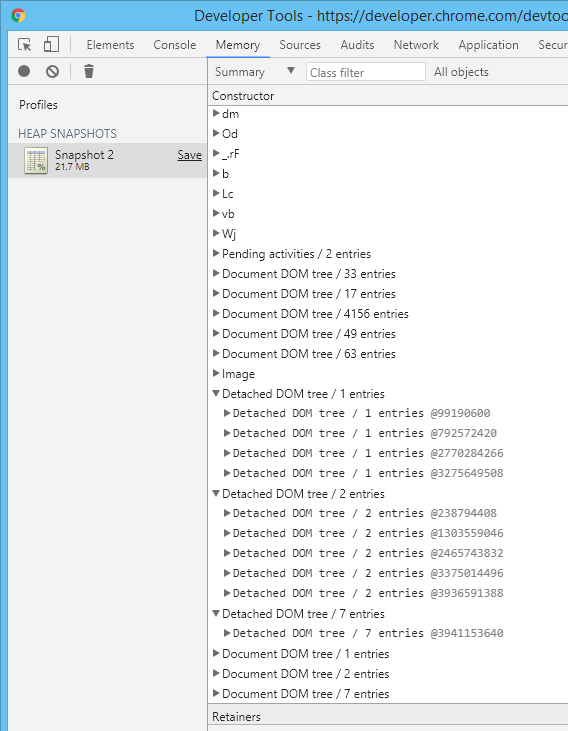

Prevent memory leaks

If we have a memory leak in a loop, the page will reserve more and more memory, occupying all the available memory of the device and making everything slower and slower. This bug usually occurs in carousels and image sliders.

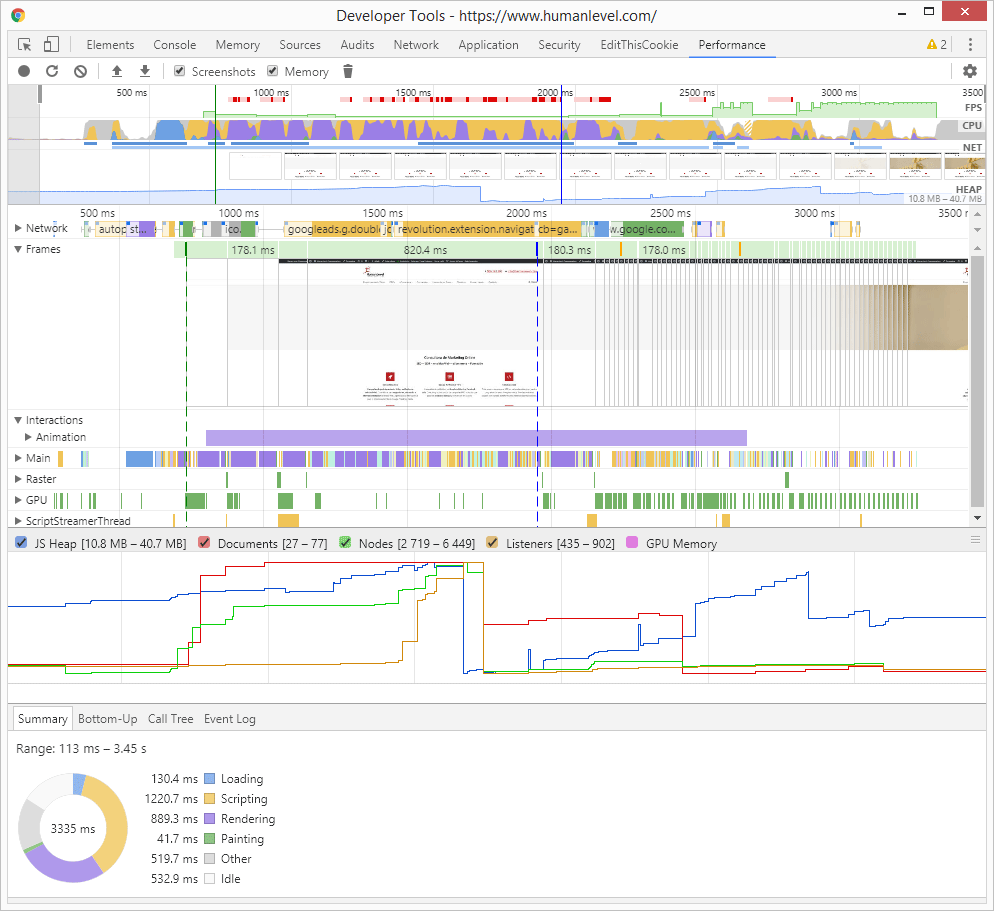

In Chrome we can check whether our website is leaking memory by recording a timeline in the Performance tab of the developer tools.

Memory leaks are usually caused by fragments of the DOM that are removed from the page but are still referenced by some variable, so the garbage collector cannot free them. These leaks often come from not understanding how variable scope and closures work in JavaScript.
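Here is a sketch of the slider case, using a hypothetical createSlider helper. A timer and a listener registered on a long-lived object (such as window) keep the slider's closure alive, and with it the slide nodes. A destroy() method that undoes both lets the garbage collector reclaim everything once the slider is removed from the page.

```javascript
// Hypothetical slider. longLivedTarget is any EventTarget that outlives the
// slider (in a browser, typically window or document).
function createSlider(slides, longLivedTarget, intervalMs = 3000) {
  let current = 0;
  const next = () => {
    current = (current + 1) % slides.length;
  };
  const onKey = () => next();

  // Both of these keep a reference to the closure above (and to slides).
  const timerId = setInterval(next, intervalMs);
  longLivedTarget.addEventListener("keydown", onKey);

  return {
    get current() {
      return current;
    },
    // Must be called when the slider's DOM is removed from the page.
    destroy() {
      clearInterval(timerId);
      longLivedTarget.removeEventListener("keydown", onKey);
      slides = null; // drop our own reference to the removed nodes
    },
  };
}
```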

Use web workers when you need to execute code that requires a long execution time.

All processors nowadays are multithreaded and multicore, but JavaScript has traditionally been a single-threaded language. Its timers run on that same thread, which also handles interaction with the interface. So while JavaScript is executing, the interface freezes, and if this takes longer than 50 ms, the user will notice. Web workers and service workers bring multithreaded execution to JavaScript, but they do not allow direct access to the DOM. So when we have code that takes more than 50 ms to execute, we should structure it so that a web worker does the computation and the main thread applies the result to the DOM afterwards.
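Here is a minimal sketch of moving a long, DOM-free computation to a web worker. The function names are hypothetical. In a real project the worker would normally live in its own file (new Worker("/sum-worker.js"), also hypothetical). Here it is built from a Blob only to keep the example self-contained. When Worker does not exist, as in Node.js, the sketch falls back to running on the current thread.

```javascript
// Heavy computation with no DOM access: suitable for a worker.
function sumOfSquares(n) {
  let total = 0;
  for (let i = 1; i <= n; i++) total += i * i;
  return total;
}

// Runs sumOfSquares off the main thread when Web Workers are available.
function sumOfSquaresInWorker(n) {
  if (typeof Worker === "undefined") {
    // Fallback for environments without Web Workers.
    return Promise.resolve(sumOfSquares(n));
  }
  const source = `${sumOfSquares}\nself.onmessage = (e) => self.postMessage(sumOfSquares(e.data));`;
  const url = URL.createObjectURL(new Blob([source], { type: "text/javascript" }));
  const worker = new Worker(url);
  return new Promise((resolve, reject) => {
    const cleanUp = () => {
      worker.terminate();
      URL.revokeObjectURL(url);
    };
    worker.onmessage = (event) => {
      cleanUp();
      resolve(event.data);
    };
    worker.onerror = (error) => {
      cleanUp();
      reject(error);
    };
    worker.postMessage(n);
  });
}
```

The DOM update then happens on the main thread, in the .then() callback that receives the result, for example by writing it to the textContent of some hypothetical output element.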

Using the Fetch API (AJAX)

The Fetch API (or AJAX) is also a good way to make the user perceive a faster loading time. We should not use it for the initial load, though, only for subsequent navigation, and in a way that remains indexable. The best way to implement it is with a framework that uses Universal JavaScript.

Prioritize access to local variables

JavaScript first looks for a variable in the local scope and then keeps looking in the enclosing scopes, the last one being the global scope. Local variables are accessed faster because the engine does not have to look them up in outer scopes. So a good strategy is to store in local variables any outer-scope variables we are going to access several times. In addition, do not create new scopes with closures or with the with and try...catch statements unless necessary.
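Here is a small illustration with hypothetical names: an outer-scope value read on every iteration versus copied once into a local. How much this gains depends on the engine, since modern JIT compilers already optimize many such lookups.

```javascript
// Hypothetical configuration living in an outer scope.
var appConfig = { scale: 2 };

// Resolves appConfig (outer scope) and its property on every iteration.
function scaleAllOuter(values) {
  const result = [];
  for (let i = 0; i < values.length; i++) {
    result.push(values[i] * appConfig.scale);
  }
  return result;
}

// Reads the outer value once into a local variable and reuses it.
function scaleAllLocal(values) {
  const scale = appConfig.scale;
  const result = new Array(values.length);
  for (let i = 0; i < values.length; i++) {
    result[i] = values[i] * scale;
  }
  return result;
}
```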

If you access a DOM element several times save it in a local variable

DOM access is slow. So if we are going to read the content of an element several times, it is better to save the element in a local variable. That way JavaScript will not have to look for it in the DOM every time we access its content. But be careful: if you save in a variable a piece of the DOM that you are going to remove from the page and will not use again, set that variable to null so that it does not cause a memory leak.
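Here is a sketch of both halves of this advice, with a hypothetical element id (promo-banner). The document is passed in as a parameter (normally the global document) so the logic can be exercised outside a browser.

```javascript
// Long-lived cached reference to an element used many times.
let banner = null;

function getBanner(doc) {
  if (banner === null) {
    banner = doc.getElementById("promo-banner"); // looked up only once
  }
  return banner;
}

function closeBanner() {
  if (banner !== null) {
    banner.remove();
    banner = null; // otherwise this variable keeps the detached node alive
  }
}
```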

Group and minimize DOM and CSSOM reads and writes

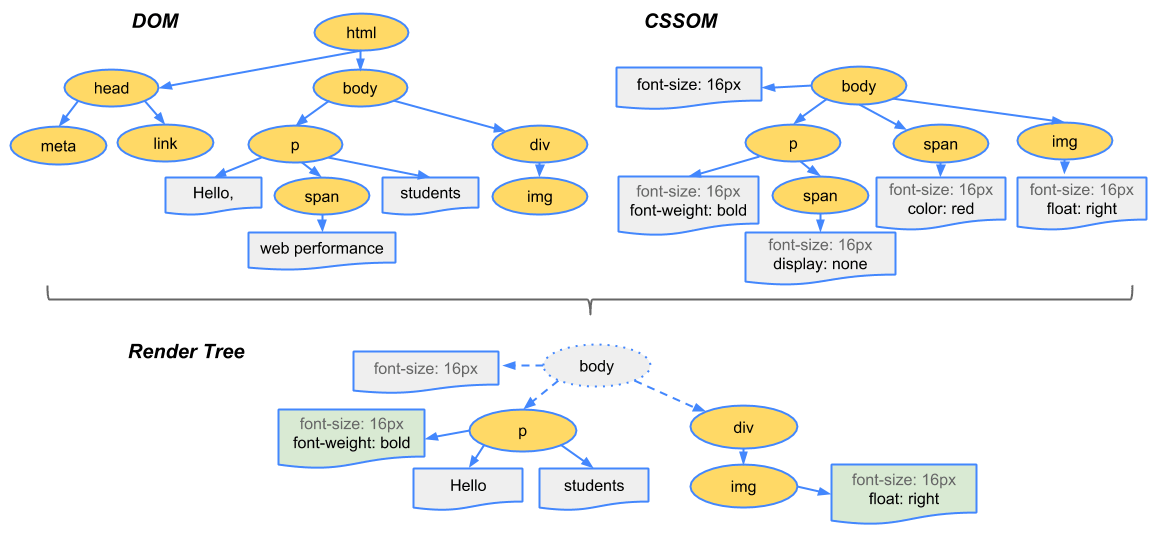

When the browser draws a page, it goes through the critical rendering path, which on first load follows these steps:

1. The HTML is received.

2. Construction of the DOM begins.

3. While the DOM is being built, external resources (CSS and JS) are requested.

4. The CSSOM (CSS Object Model) is built from the CSS.

5. The render tree is created by combining the DOM and the CSSOM. It contains only the nodes that will be drawn.

6. From the render tree, the geometry of each visible part of the tree in a layer is calculated. This stage is called layout or reflow.

7. In the final painting stage, the layers from step 6 are painted, processed and composited on top of each other to display the page to the user.

8. If the JavaScript has finished compiling, it is executed (actually, this step can occur at any point after step 3, and the sooner the better).

9. If in the previous step the JavaScript code forces part of the DOM or the CSSOM to be redone, the browser goes back several steps and repeats them up to step 7.

Browsers queue changes to the render tree and decide when to repaint. Even so, if we have a loop in which we read the DOM and/or CSSOM and modify it on the next line, the browser may be forced to reflow or repaint the page several times, especially if each read depends on the previous write. This is why we recommend the following:

- Do all the reads first, in a separate loop. Then do all the writes in one go, with the cssText property for the CSSOM or innerHTML for the DOM, so that the browser only has to trigger one repaint.

- If reads depend on previous writes, find a way to rewrite the algorithm so that this is not the case.

- If you have no choice but to apply a lot of changes to a DOM element, take it out of the DOM, make the changes and put it back in where it was.

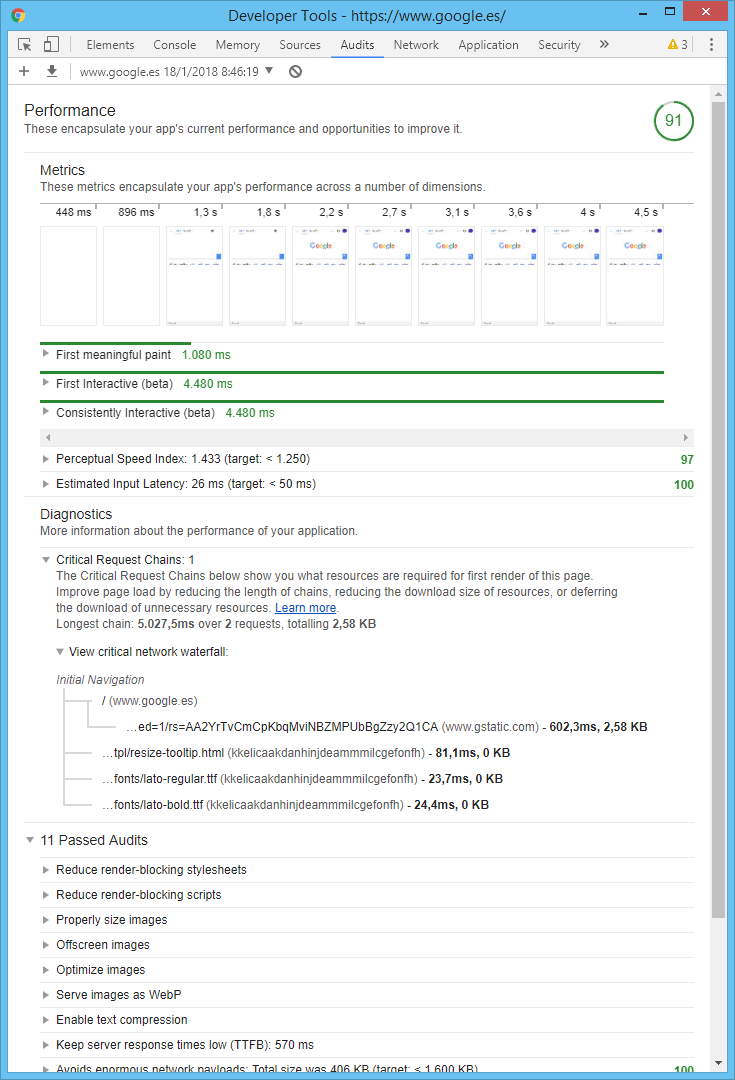

- In Google Chrome, we can analyze what happens in the critical rendering path in two ways: with the Lighthouse tool (the “Audits” tab, named “Lighthouse” in recent versions), or in the “Performance” tab by recording what happens while the page is loading.
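Here is a sketch of the first recommendation, using hypothetical card elements that should all take the height of the tallest one. It relies only on the standard offsetHeight and style properties, so it can also be exercised with plain objects.

```javascript
// Interleaved version: each offsetHeight read comes right after a style
// write, which can force a synchronous reflow on every iteration.
function equalizeHeightsInterleaved(cards) {
  let max = 0;
  for (const card of cards) {
    card.style.height = "auto"; // write
    max = Math.max(max, card.offsetHeight); // read: forces layout
  }
  for (const card of cards) card.style.height = `${max}px`;
  return max;
}

// Batched version: all writes the reads depend on, then all reads,
// then all final writes, so layout is computed only once in between.
function equalizeHeightsBatched(cards) {
  for (const card of cards) card.style.height = "auto";
  const heights = Array.from(cards, (card) => card.offsetHeight);
  const max = Math.max(0, ...heights);
  for (const card of cards) card.style.height = `${max}px`;
  return max;
}
```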

Use the requestAnimationFrame(callback) function in animations and scroll-dependent effects.

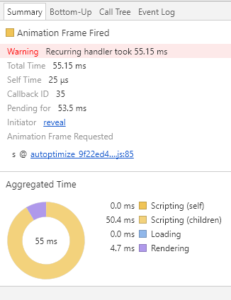

The requestAnimationFrame() function defers the function passed as a parameter until just before the browser's next scheduled repaint, so the changes it makes do not cause extra repaints. Besides avoiding unnecessary repaints, this means animations stop while the user is in another tab, saving CPU and device battery.

Scroll-dependent effects are the slowest, because accessing the following DOM properties can force a reflow (step 6 of the previous section):

- offsetTop, offsetLeft, offsetWidth, offsetHeight

- scrollTop, scrollLeft, scrollWidth, scrollHeight

- clientTop, clientLeft, clientWidth, clientHeight

- getComputedStyle() (currentStyle in IE)

Suppose that, based on one of these properties, we move a banner or menu that follows you when scrolling, or create a parallax scrolling effect. Then several layers will be repainted every time the scroll moves. This hurts the interface's response time, and the scroll may jump instead of sliding smoothly. Therefore, with these effects, you should save the last scroll position in a global variable in the onscroll event. Then call requestAnimationFrame() only if the previously requested frame has already been drawn.
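Here is a minimal sketch of that pattern, with hypothetical names. It keeps the last position in a closure variable instead of a true global. The schedule parameter defaults to requestAnimationFrame and exists only so the logic can be tested elsewhere.

```javascript
// Every scroll event stores the latest position, but at most one frame is
// requested at a time; render runs once per repaint with the last value.
function createScrollHandler(readScrollY, render, schedule = (cb) => requestAnimationFrame(cb)) {
  let lastScrollY = 0;
  let frameRequested = false;
  return function onScroll() {
    lastScrollY = readScrollY();
    if (frameRequested) return; // the previous frame has not been drawn yet
    frameRequested = true;
    schedule(() => {
      frameRequested = false;
      render(lastScrollY);
    });
  };
}
```

In a page, it would be attached with window.addEventListener("scroll", createScrollHandler(() => window.scrollY, updateHeader)). Here updateHeader is a hypothetical function that moves the sticky menu or parallax layer.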

If there are many similar events, group them together

If you have 300 buttons that do practically the same thing when clicked, we can attach one event listener to their parent element instead of attaching a listener to each button. When a button is clicked, the event “bubbles” up to the parent. From there we can find out which button the user clicked and adapt the behavior accordingly.
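Here is a sketch of this event delegation with a hypothetical helper name. The container and the selector are whatever fits the page.

```javascript
// One click listener on the container handles clicks on any matching child.
function delegateClicks(container, selector, handler) {
  container.addEventListener("click", (event) => {
    // closest() finds the clicked button even if the click hit an inner element.
    const button = event.target.closest(selector);
    if (button && container.contains(button)) {
      handler(button, event);
    }
  });
}
```

A toolbar with 300 buttons would then need a single call, such as delegateClicks(document.getElementById("toolbar"), "button", (button) => runAction(button.dataset.action)). The toolbar id, the data-action attribute and runAction are hypothetical.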

Beware of events that are triggered several times in a row

Events such as onmousemove or onscroll are fired several times in a row while the action is being performed. So make sure that the associated code is not executed more times than necessary, as this is a very common error.
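One common way to limit this is debouncing: the handler runs only once the events have stopped arriving for a given time. This suits events like resize or typing. For continuous visual effects tied to scrolling, the requestAnimationFrame approach described earlier fits better. Here is a minimal debounce sketch (the name is ours, not a built-in):

```javascript
// Returns a wrapper that postpones fn until waitMs have passed without new
// calls; only the last call's arguments are used.
function debounce(fn, waitMs) {
  let timerId = null;
  return function debounced(...args) {
    clearTimeout(timerId);
    timerId = setTimeout(() => {
      timerId = null;
      fn.apply(this, args);
    }, waitMs);
  };
}
```

For example, window.addEventListener("resize", debounce(recalculateLayout, 150)) would run the hypothetical recalculateLayout function once after the user stops resizing.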

Avoid executing code strings with eval(), Function(), setTimeout() and setInterval()

Passing code in a string, to be parsed and compiled while the rest of the code is running, is quite slow. For example: eval("c = a + b");. The code can always be rewritten to avoid this.
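Here are a few typical rewrites, with hypothetical names. A dynamic property access replaces eval(), a normal function replaces Function(), and a function reference replaces the string form of setTimeout().

```javascript
const settings = { width: 800, height: 600 };

// Instead of eval("settings." + name): bracket notation reads a dynamic property.
function getSetting(name) {
  return settings[name];
}

// Instead of new Function("a", "b", "return a + b"): an ordinary function.
const add = (a, b) => a + b;

// Instead of setTimeout("refresh()", delayMs): pass the function itself.
function scheduleRefresh(refresh, delayMs) {
  return setTimeout(refresh, delayMs);
}
```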

Implement optimizations that you would apply in any other programming language

- Always use the algorithms with the lowest computational complexity or cyclomatic complexity for the task to be solved.

- Use the optimal data structures to achieve the above point.

- Rewrite the algorithm to obtain the same result with fewer calculations.

- Avoid recursive calls by changing the algorithm to an equivalent one that makes use of a stack.

- If a costly function is called repeatedly with the same arguments from several blocks of code, make it store its results in memory for the next call (memoization).

- Put calculations and repeated function calls in variables.

- When traversing a loop, first store the size of the collection in a variable, so that it is not recalculated in the end condition on every iteration.

- Factor and simplify mathematical formulas.

- Replace calculations that do not depend on variables with constants and leave the calculation commented out.

- Use lookup tables (arrays or objects that return a value based on another value) instead of a switch block.

- Write conditions so that they are usually true, to take better advantage of the processor's speculative execution, since branch prediction will then fail less often.

- Simplify Boolean expressions with the rules of Boolean logic or better yet with Karnaugh maps.

- Use bitwise operators where they can replace certain operations, since these operators use fewer processor cycles. Using them requires knowledge of binary arithmetic. For example, if x is a non-negative integer smaller than 2^31, we can write “y=x>>1;” instead of “y=Math.floor(x/2);”, or “y=x&0xFF;” instead of “y=x%256;”. In JavaScript, / is floating-point division, so x>>1 is not equivalent to x/2.
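Here are a few of the items above sketched in JavaScript, with hypothetical names: memoization of a pure function, a lookup table in place of a switch, recursion replaced by an explicit stack, and the bitwise equivalences. The bitwise equivalences hold only for non-negative integers below 2^31.

```javascript
// Memoization: cache the results of a costly pure function by argument.
function memoize(fn) {
  const cache = new Map();
  return function (arg) {
    if (!cache.has(arg)) cache.set(arg, fn(arg));
    return cache.get(arg);
  };
}

// Lookup table instead of a switch block (hypothetical status labels).
const STATUS_LABELS = { 200: "OK", 404: "Not Found", 500: "Server Error" };
const statusLabel = (code) => STATUS_LABELS[code] ?? "Unknown";

// Recursion replaced by an explicit stack: sums a tree of
// { value, children } nodes without risking a call-stack overflow.
function sumTree(root) {
  let total = 0;
  const stack = [root];
  while (stack.length > 0) {
    const node = stack.pop();
    total += node.value;
    for (const child of node.children) stack.push(child);
  }
  return total;
}

// Bitwise equivalents, valid only for non-negative integers below 2**31:
// x >> 1 equals Math.floor(x / 2), and x & 0xff equals x % 256.
const half = (x) => x >> 1;
const mod256 = (x) => x & 0xff;
```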

These are some of my favorites. The first three are the most important and the ones that require the most study and practice. The rest are micro-optimizations that are only worthwhile if you apply them while writing the code, or if the code is computationally very expensive, such as a video editor or a video game. In those cases, though, you would be better off using WebAssembly instead of JavaScript.

Tools to detect problems

We have already seen several. Of all of them, Lighthouse is the easiest to interpret, since it simply gives us a list of points to improve. Google PageSpeed Insights and many other tools, such as GTmetrix, do the same. In Chrome we can also use the Task Manager, under the “More tools” option of the main menu, to see the memory and CPU used by each tab. For more technical analysis, the Firefox and Google Chrome developer tools have a tab called “Performance” that lets us analyze in detail the time of each phase, memory leaks, etc. Let's look at an example:

All of this information can be recorded while the page loads, while we perform an action or while we scroll. We can then zoom in on part of the chart to see it in detail. If, as in this case, JavaScript execution is what takes the longest, we can expand the Main section and click on the scripts that take the longest time. In the Bottom-Up tab, the tool shows in detail how the JavaScript affects each phase of the critical rendering path. In the Summary tab, it shows a warning if it has detected a performance problem in the JavaScript. Clicking on the file takes you to the line that causes the delay.

Finally, for even finer analysis, we can use two JavaScript APIs. The Navigation Timing API measures the phases of page loading. The User Timing API (performance.mark() and performance.measure()) lets us measure, from the code itself, how long each part of our code takes.
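For timing individual parts of our own code, the User Timing API (performance.mark() and performance.measure()) is the usual tool. Here is a minimal sketch; timeBlock and the mark names are hypothetical labels chosen for the example.

```javascript
// Times a synchronous piece of our own code with the User Timing API.
function timeBlock(label, work) {
  const startMark = `${label}-start`;
  const endMark = `${label}-end`;
  performance.mark(startMark);
  const result = work();
  performance.mark(endMark);
  // Recent browsers and Node.js return the entry; older engines return undefined.
  const entry =
    performance.measure(label, startMark, endMark) ||
    performance.getEntriesByName(label, "measure").pop();
  const durationMs = entry.duration;
  // Clear the entries so repeated calls do not fill the performance buffer.
  performance.clearMarks(startMark);
  performance.clearMarks(endMark);
  performance.clearMeasures(label);
  return { result, durationMs };
}
```

In Chrome, measures like these also appear in the Timings track of a Performance tab recording (as long as they have not been cleared), which makes them easy to match with the phases described above.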

Final recommendations

As you can see, optimizing JavaScript is not easy. It involves a laborious analysis and optimization process that can easily exceed the development budget we initially planned. That is why many of the best-known websites, and many plugins and themes for the most common content management systems, have many of the problems I have listed.

If your website has these problems, try to solve the ones with the greatest impact on performance first, and always make sure the optimizations do not affect the maintainability and quality of the code. That is why I do not recommend more extreme optimization techniques. Examples include removing function calls by inlining the code they call, unrolling loops, or reusing the same variable for everything so that it is loaded from cache or from processor registers. These techniques make the code messy, and JavaScript's runtime compilation already applies some of them automatically. So remember:

Performance is not a requirement that should ever take precedence over the ease of detecting bugs and adding functionality.