Written by Ramón Saquete


Technological progress in web development lets us build websites faster and faster. However, the websites themselves are becoming slower and slower. This is caused by ever heavier development frameworks and libraries, and by designs that chase visual impact, often without considering the consequences for WPO (Web Performance Optimization).

Let’s see how all this is affecting the web ecosystem and what we can do to remedy it and significantly improve WPO.

The importance of the size of images, CSS and JavaScript

When we talk about static resources, we mean images, CSS, JavaScript and fonts. Their ever-increasing size is what makes websites slower and slower. This is not a personal opinion but a conclusion extrapolated from CrUX (Chrome User Experience Report) data. For those of you who don’t know it, CrUX is a database of performance data that Google collects from real users through its Chrome browser and publishes openly every month. Let’s look at some graphs from HTTP Archive (https://httparchive.org/reports) that use this data, along with historical data from the Internet Archive (the open database behind the Wayback Machine):

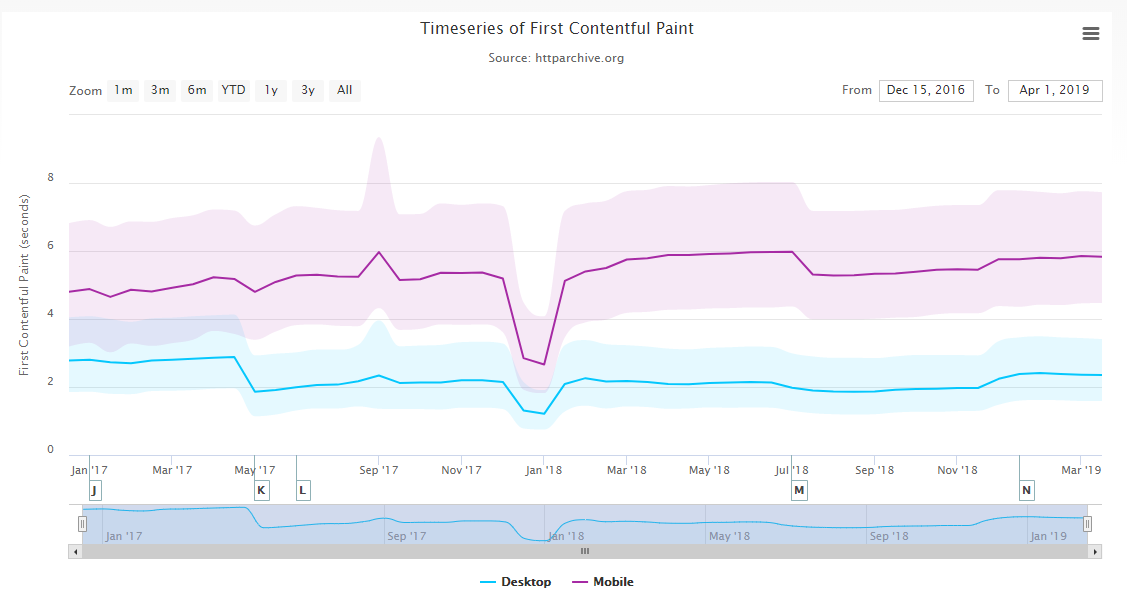

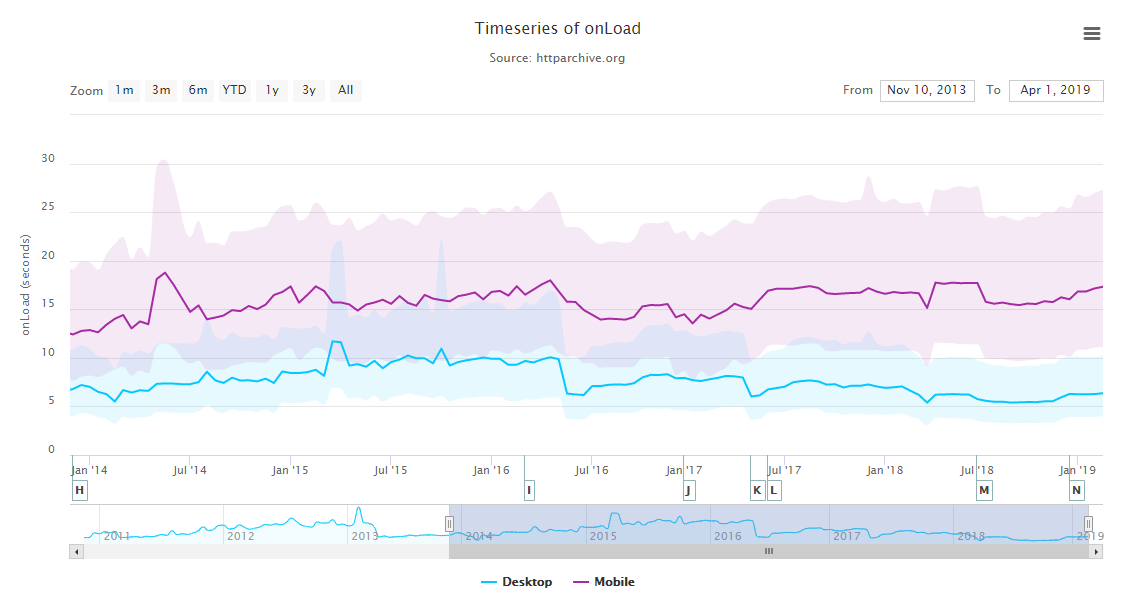

Here we see how some metrics such as FCP (First Contentful Paint) and total load time have gradually increased in recent years on mobile:

On the other hand, on desktop the loading time has decreased, probably due to the improvement in transfer speeds.

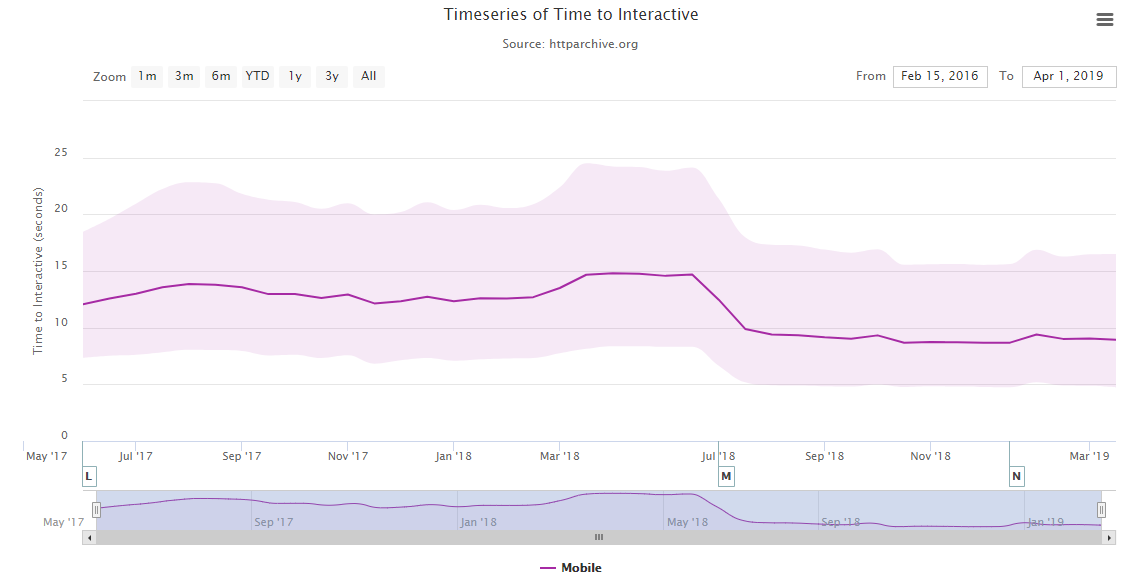

Although pages take longer to display and download on mobile, the time it takes for the content to become functional, i.e., TTI (Time to Interactive), has improved slightly. However, as we will see below, this improvement cannot be attributed to better-optimized pages, so it is probably due to browser optimizations and more powerful CPUs:

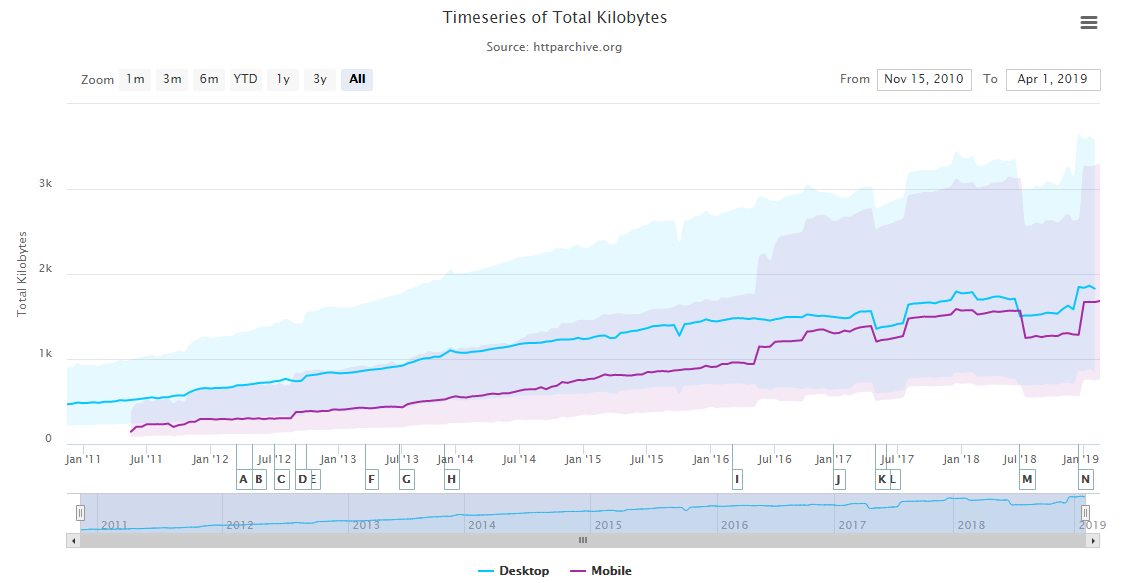

To verify what I mentioned in the previous paragraph, the following graph shows the evolution of the total weight of pages (on the Y-axis, 1k means 1,000 KB). The average web page is approaching 2 MB. It is true that transmission speeds keep getting higher and more reliable thanks to technologies such as 4G LTE or the upcoming 5G, but we should not count on that: we will not always have the best coverage, nor the optimal conditions to get close to the maximum speed of the technology in use.
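A back-of-the-envelope calculation shows why the connection matters so much. Ignoring latency, TCP slow start and parallel downloads, the pure transfer time t of a page of size S over a connection with throughput B is given below. The two throughputs are illustrative assumptions (a weak mobile connection and a good one), not figures taken from the graphs:

```latex
\begin{aligned}
t &= \frac{8\,S}{B}, \qquad S = 2\ \text{MB} = 16\ \text{Mbit} \\
t_{B = 1.6\ \text{Mbit/s}} &= \frac{16\ \text{Mbit}}{1.6\ \text{Mbit/s}} = 10\ \text{s} \\
t_{B = 20\ \text{Mbit/s}} &= \frac{16\ \text{Mbit}}{20\ \text{Mbit/s}} = 0.8\ \text{s}
\end{aligned}
```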

We can also see that mobile versions of websites now weigh as much as their desktop versions, due to the adoption of responsive design since 2011.

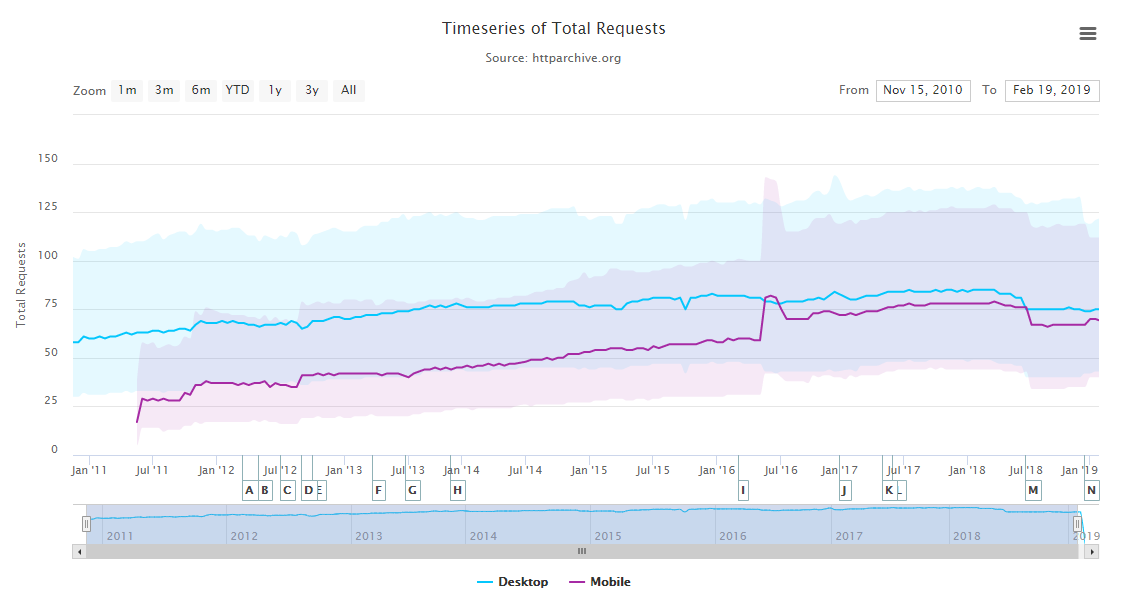

If we look at the following graph, which shows the number of requests, we see that it rose to around 75, where it has stabilized, and has even decreased slightly since then. So the growth in size in the previous graph does not mean a richer web, but the use of heavier resources, such as unoptimized development frameworks, entire fonts loaded just to display four icons, or full-screen-width images and videos that provide no relevant information to the user.

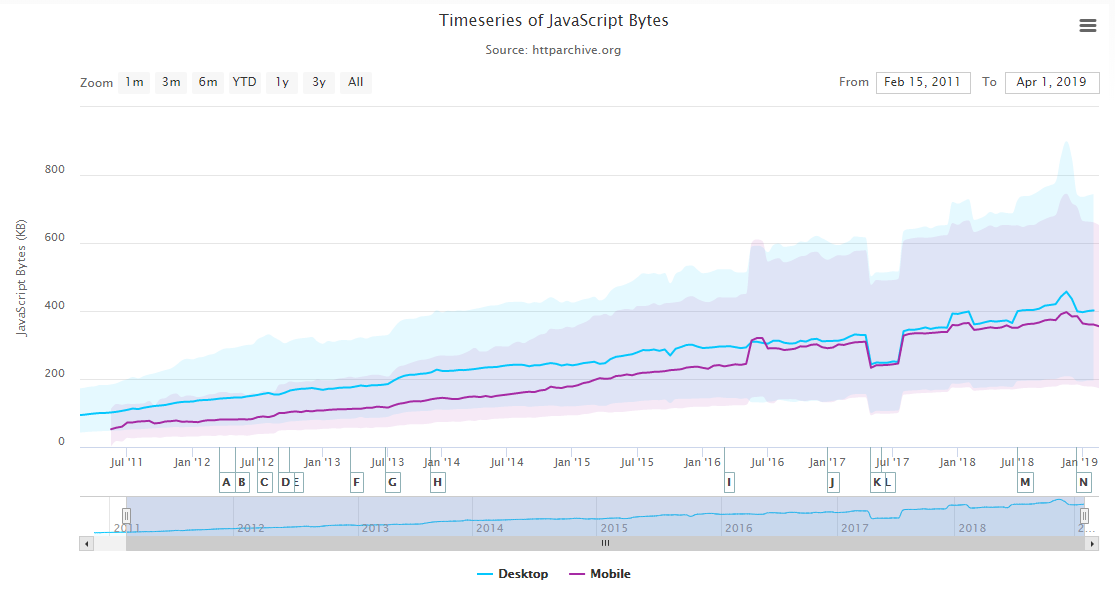

JavaScript size is an important factor, not only because of download time, but also because this code must be parsed, compiled and executed, using CPU and memory, which affects interaction and painting metrics. In the following graph we see that the average has reached 400 KB, but it is not uncommon to find web pages that far exceed this figure, reaching a megabyte or more; as we can see, the spread of the results (their coefficient of variation) has also grown:

This growth in JavaScript size is probably due to the proliferation of frameworks that offer more and more features, many of which are never used. The problem arises when there is no budget for the time needed to remove unnecessary code or defer its loading.

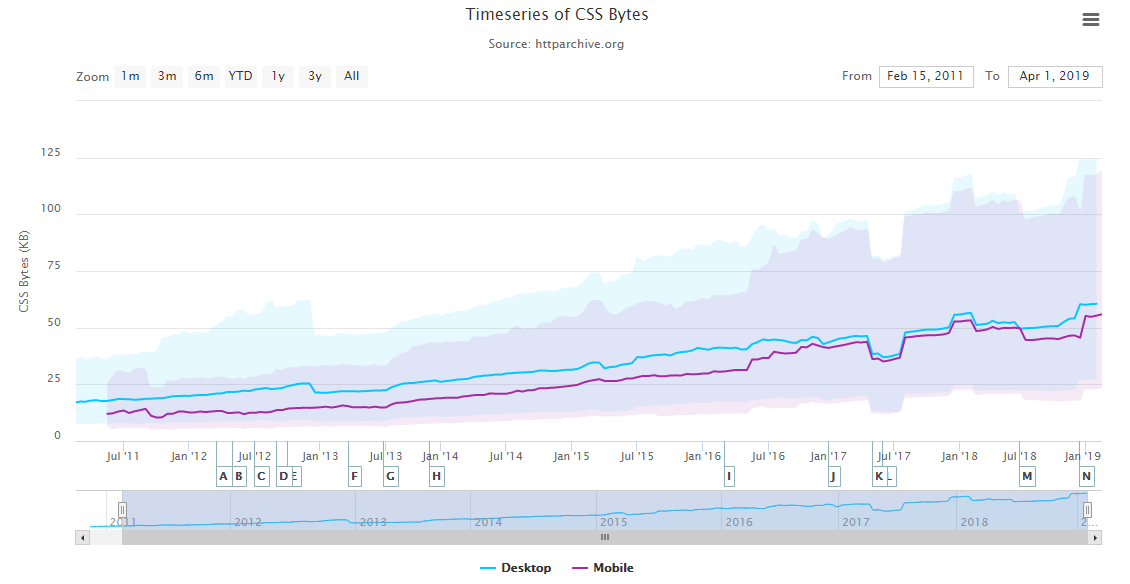

CSS frameworks are also problematic: a framework like Bootstrap takes up about 150 KB (about 20 KB compressed), which is 10 times more than the CSS a page normally needs for a responsive design. It may not seem like much to download, but it is once we consider that the FCP metric depends on the time needed to process that file, because CSS blocks rendering until it has been downloaded and parsed:
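The gap between 150 KB and 20 KB is the difference between what the browser has to parse (the uncompressed file) and what travels over the network (the gzip- or Brotli-compressed response). A minimal Node.js sketch that measures both for any CSS or JavaScript content; it assumes a Node.js version with built-in Brotli support in `node:zlib`, and the sample stylesheet is made up for illustration:

```typescript
import { brotliCompressSync, gzipSync } from "node:zlib";

// Bytes the browser must parse (raw) versus bytes actually transferred
// when the server compresses the file with gzip or Brotli.
interface ResourceSizes {
  raw: number;
  gzip: number;
  brotli: number;
}

function measureSizes(content: string): ResourceSizes {
  const buf = Buffer.from(content, "utf8");
  return {
    raw: buf.length,
    gzip: gzipSync(buf, { level: 9 }).length,
    brotli: brotliCompressSync(buf).length,
  };
}

// Hypothetical stylesheet: repetitive utility classes, which compress very well,
// much like the repeated selectors and declarations of a CSS framework.
const css = Array.from(
  { length: 500 },
  (_, i) => `.m-${i} { margin: ${i}px !important; }`,
).join("\n");

const sizes = measureSizes(css);
console.log(`raw: ${sizes.raw} B, gzip: ${sizes.gzip} B, brotli: ${sizes.brotli} B`);
```

To measure a real file, pass in its contents read with `readFileSync`, for example `node_modules/bootstrap/dist/css/bootstrap.min.css`. Servers may use different compression levels, so the compressed figures only approximate the real transfer size.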

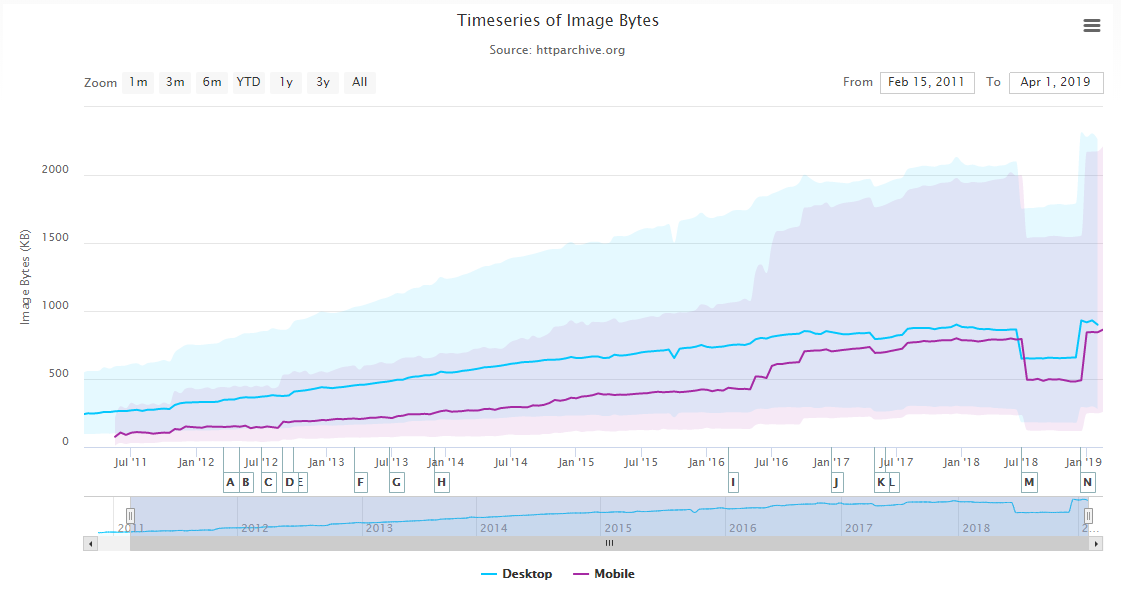

In the following graph, although there is a data collection error towards the end, we can see that the size of images is also increasing:

How to measure the weight of static resources

Smaller static resources do not necessarily mean better metrics: what the code we load actually does also matters. However, there is usually a strong correlation between the weight of these resources and the metrics obtained in Google PageSpeed Insights, which in turn affect rankings.

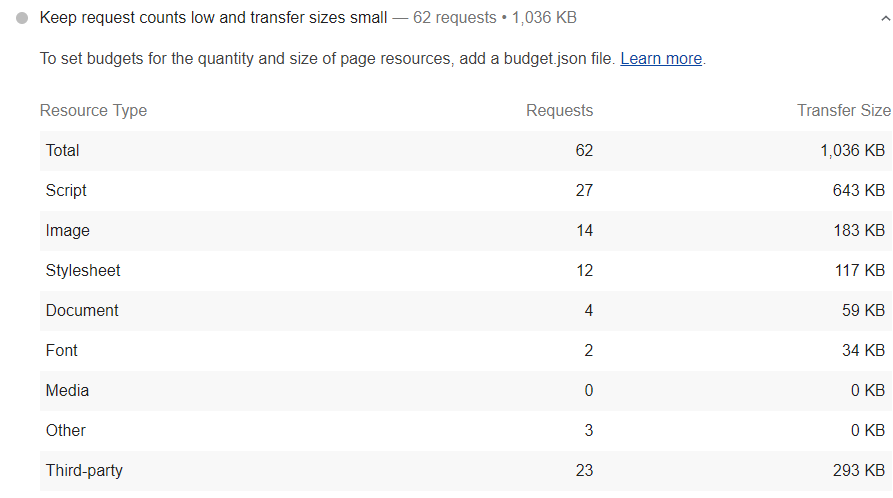

With the redesign of Google PageSpeed Insights, the version of Lighthouse from which this tool takes its data has also been updated, adding a breakdown of the size of a website’s resources in the section “Keep request counts low and transfer sizes small”:

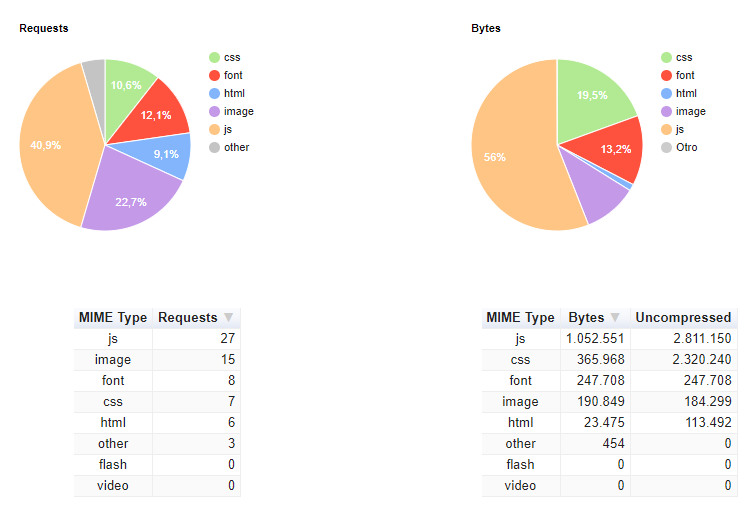

Another option is webpagetest.org. After running a test, clicking the “Content Breakdown” link gives us the following breakdown:

The command-line version of Lighthouse also lets us set a performance budget for static resources, that is, define size limits for each type of resource so that we meet our load time targets.

On https://www.performancebudget.io/ we can estimate how much our resources can weigh for a page to load in a given time on a specific type of connection. With the resulting figures we can create a JSON file that defines these limits for Lighthouse, or simply keep them as a reference.
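As a rough sketch of both steps, the following TypeScript estimates a total size budget from a target load time and a connection profile, splits it between resource types, and prints it in the shape of a Lighthouse budget file (an array of entries with `path` and `resourceSizes`, sizes in kilobytes). The Lighthouse CLI can read such a file through its `--budget-path` option. The timing model, the connection figures and the percentage split are assumptions for illustration; performancebudget.io may calculate differently.

```typescript
// Subset of the resource types accepted in a Lighthouse budget.json file.
type ResourceType =
  | "document" | "stylesheet" | "script" | "font"
  | "image" | "media" | "other" | "total";

interface ResourceSizeBudget {
  resourceType: ResourceType;
  budget: number; // size limit in kilobytes
}

interface Budget {
  path: string;
  resourceSizes: ResourceSizeBudget[];
}

// Rough model (our assumption, not performancebudget.io's formula): reserve some
// round trips for DNS, TCP, TLS and the HTML request, then spend the remaining
// time downloading at the nominal downlink speed.
function estimateTotalKB(
  targetSeconds: number,
  downlinkKbps: number,
  rttMs: number,
  roundTrips: number,
): number {
  const transferSeconds = targetSeconds - (rttMs * roundTrips) / 1000;
  if (transferSeconds <= 0) return 0;
  // kilobits per second / 8 = kilobytes per second
  return Math.floor((transferSeconds * downlinkKbps) / 8);
}

// Hypothetical split of the total between resource types; adjust per project.
const SHARES: Array<[ResourceType, number]> = [
  ["document", 0.1],
  ["stylesheet", 0.1],
  ["script", 0.3],
  ["font", 0.1],
  ["image", 0.4],
];

function buildBudget(totalKB: number): Budget[] {
  const perType: ResourceSizeBudget[] = SHARES.map(([resourceType, share]) => ({
    resourceType,
    budget: Math.floor(totalKB * share),
  }));
  return [{ path: "/*", resourceSizes: [...perType, { resourceType: "total", budget: totalKB }] }];
}

// Returns the resource types whose measured size (in KB) exceeds their budget.
function overBudget(budget: Budget, measuredKB: Partial<Record<ResourceType, number>>): ResourceType[] {
  return budget.resourceSizes
    .filter(({ resourceType, budget: limit }) => (measuredKB[resourceType] ?? 0) > limit)
    .map(({ resourceType }) => resourceType);
}

// Example: 3 s target on a connection assumed to offer 1,600 kbps and 150 ms RTT.
const totalKB = estimateTotalKB(3, 1600, 150, 4);
const budgets = buildBudget(totalKB);
console.log(JSON.stringify(budgets, null, 2)); // could be saved as budget.json
console.log(overBudget(budgets[0], { script: 400, image: 150, total: 700 }));
```

The measured sizes passed to `overBudget` would come from a breakdown such as the ones shown by Lighthouse or WebPageTest.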

How to reduce the weight of static resources

In general, apart from eliminating everything that is not used, it is always advisable to preload the critical above-the-fold resources needed to paint what the user sees first, to cache resources in the browser with cache headers and a Service Worker, and to defer the loading of non-critical resources. This is known as the PRPL pattern (pronounced “purple”): Preload, Render fast, Pre-cache and Lazy-load.
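As an illustration of the browser-caching part, here is a minimal TypeScript sketch of how a server might choose the `Cache-Control` header for each file. It assumes, hypothetically, that static files are versioned with a content hash in their names (e.g. `app.3f9a1c2b.js`); the regular expression and the one-hour fallback are arbitrary choices, and the Service Worker side is not covered.

```typescript
// Hypothetical naming convention: a dot, 8+ hex characters of content hash,
// then the extension (e.g. "app.3f9a1c2b.js").
const HASHED_ASSET = /\.[0-9a-f]{8,}\.(?:js|css|woff2|webp|png|jpe?g|svg)$/;

function cacheControlFor(path: string): string {
  if (HASHED_ASSET.test(path)) {
    // A new version gets a new URL, so this one can be cached for a year.
    return "public, max-age=31536000, immutable";
  }
  if (path.endsWith(".html") || path.endsWith("/")) {
    // Store the HTML but revalidate it, so it always points to current asset URLs.
    return "no-cache";
  }
  // Unversioned static files: short cache (arbitrary one-hour choice).
  return "public, max-age=3600";
}

for (const p of ["/js/app.3f9a1c2b.js", "/index.html", "/favicon.ico"]) {
  console.log(p, "->", cacheControlFor(p));
}
```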

Another technique that speeds up the download of all resources is using a CDN to avoid high latency, especially if our website serves visitors in several countries.

How to reduce the weight of CSS and JavaScript files

We have already explained how to optimize CSS and JavaScript. Specifically, to reduce their weight, the ideal is to use a bundler such as Webpack, or task runners such as Grunt or Gulp, together with their corresponding plugins, to remove unused CSS, apply Tree Shaking to JavaScript (shaking the dependency tree, that is, automatically detecting the code that is used in order to discard the rest), extract critical CSS, minify and version files. If our website is built with a CMS like WordPress, PrestaShop or similar, we can also find several plugins that perform these tasks with greater or lesser success; for WordPress we have LiteSpeed, WP-Rocket, Autoptimize Critical CSS, …
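Versioning usually means embedding a hash of the file’s contents in its name, which webpack does with the `[contenthash]` placeholder in `output.filename`. A minimal Node.js sketch of the same idea (the function name and the 8-character hash length are our own choices, not a bundler API):

```typescript
import { createHash } from "node:crypto";
import { basename, extname } from "node:path";

// Inserts a short hash of the file contents into its name
// ("main.css" -> "main.<hash>.css"): the URL changes only when the content
// changes, so browsers can cache the file for a long time.
function versionedName(fileName: string, contents: string, hashLength = 8): string {
  const hash = createHash("sha256").update(contents).digest("hex").slice(0, hashLength);
  const ext = extname(fileName); // e.g. ".css"
  const base = basename(fileName, ext); // e.g. "main"
  return `${base}.${hash}${ext}`;
}

const v1 = versionedName("main.css", "body{margin:0}");
const v2 = versionedName("main.css", "body{margin:0;padding:0}");
console.log(v1, v2);
```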

In webpack, for example, we have PurgeCSS (https://purgecss.com/) to remove unused CSS and html-critical-webpack-plugin (https://github.com/anthonygore/html-critical-webpack-plugin) to detect critical CSS. Using them requires some configuration, as well as generating the site’s HTML, or at least that of its main templates. But the initial setup effort is well worth the large performance gain it can bring. Their execution should be automated as much as possible so as not to slow down the workflow, and the time needed to prepare this part of the development environment should be included in the initial budget.
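To illustrate what removing unused CSS means, here is a deliberately naive TypeScript sketch of the idea behind PurgeCSS: keep a rule only if the class names in its selector appear in the HTML. It is not PurgeCSS’s algorithm or API. It assumes flat CSS without at-rules (`@media`, `@font-face`) or comments and class attributes written in double quotes, and it keeps any selector without a class (such as `body`). Real tools also scan JavaScript and templates and let you protect classes added at runtime.

```typescript
// Collects every class name used in class="..." attributes of the HTML.
function usedClasses(html: string): Set<string> {
  const classes = new Set<string>();
  for (const match of html.matchAll(/class\s*=\s*"([^"]*)"/g)) {
    for (const name of match[1].split(/\s+/)) {
      if (name) classes.add(name);
    }
  }
  return classes;
}

// Keeps a rule if at least one selector in its group has all its classes in use.
function purgeCss(css: string, html: string): string {
  const used = usedClasses(html);
  const rules = css.match(/[^{}]+\{[^{}]*\}/g) ?? [];
  return rules
    .filter((rule) => {
      const selectors = rule.slice(0, rule.indexOf("{")).split(",");
      return selectors.some((selector) => {
        const names = selector.match(/\.[A-Za-z0-9_-]+/g) ?? [];
        // Selectors without classes (e.g. "body") cannot be checked, so keep them.
        return names.length === 0 || names.every((n) => used.has(n.slice(1)));
      });
    })
    .map((rule) => rule.trim())
    .join("\n");
}

const html = '<button class="btn btn-primary">OK</button>';
const css = [
  "body { margin: 0 }",
  ".btn { padding: 4px }",
  ".btn-primary { color: white }",
  ".modal { display: none }",
  ".card, .btn { border: 0 }",
].join("\n");
console.log(purgeCss(css, html));
```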

How to reduce the weight of images

As for image optimization, apart from everything we discussed previously, some additional techniques are:

- Apply Lazy Loading, which will soon be enabled by default in Chrome and will be configurable for each image with the loading attribute and the values “auto”, “eager” and “lazy”. For now, the ideal is to detect whether this feature is available and, if it is not, implement Lazy Loading with the Intersection Observer JavaScript API (again, if it is supported). A good explanation of how to do this is available at https://addyosmani.com/blog/lazy-loading/. When applying this technique, we should always also include the images inside a <noscript></noscript> element to ensure they are indexed without problems, since Google does not always execute JavaScript.

- Use Google’s WebP format, supported by Chrome, for images that turn out smaller in this format. To offer an image in several formats and let the browser choose the most suitable one, we can use the <picture> and <source> elements. We could also use the JPEG XR (.jxr) format, supported by Microsoft Edge, and JPEG 2000 (.jp2), supported by Safari, although these browsers do not have as much market share as Chrome. Example:

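<pre><picture>
  <!-- The browser uses the first source whose type it supports -->
  <source srcset="/ejemplo.webp" type="image/webp" />
  <source srcset="/ejemplo.jxr" type="image/vnd.ms-photo" />
  <source srcset="/ejemplo.jp2" type="image/jp2" />
  <!-- Fallback for browsers that support none of the formats above -->
  <img src="/ejemplo.png" alt="SEO text" />
</picture></pre>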
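The two image techniques above can be sketched as small server-side TypeScript helpers that generate the markup. `pictureMarkup` offers an alternative format only when it is smaller than the fallback image and lists sources smallest first, since the browser picks the first `<source>` whose `type` it supports. `lazyImageMarkup` produces the JavaScript lazy-loading pattern with its `<noscript>` copy. The `data-src` attribute and the `lazy` class are common conventions assumed here, and the Intersection Observer script that copies `data-src` into `src` is not included:

```typescript
// Escapes a value for use inside a double-quoted HTML attribute.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// One encoding of an image, with its size in bytes measured after encoding.
interface ImageVariant {
  url: string;
  mimeType: string; // e.g. "image/webp", "image/jp2"
  bytes: number;
}

// <picture> listing only the formats smaller than the fallback, smallest first.
function pictureMarkup(fallback: ImageVariant, alternatives: ImageVariant[], alt: string): string {
  const sources = alternatives
    .filter((v) => v.bytes < fallback.bytes)
    .sort((a, b) => a.bytes - b.bytes)
    .map((v) => `  <source srcset="${escapeAttr(v.url)}" type="${escapeAttr(v.mimeType)}">`);
  return [
    "<picture>",
    ...sources,
    `  <img src="${escapeAttr(fallback.url)}" alt="${escapeAttr(alt)}">`,
    "</picture>",
  ].join("\n");
}

// JavaScript lazy loading: a script (not shown) would copy data-src into src
// when the image approaches the viewport; the <noscript> copy gives crawlers
// that don't run JavaScript a normal <img>.
function lazyImageMarkup(url: string, alt: string): string {
  const src = escapeAttr(url);
  const text = escapeAttr(alt);
  return `<img data-src="${src}" alt="${text}" class="lazy">\n<noscript><img src="${src}" alt="${text}"></noscript>`;
}

console.log(
  pictureMarkup(
    { url: "/example.png", mimeType: "image/png", bytes: 120000 },
    [
      { url: "/example.webp", mimeType: "image/webp", bytes: 60000 },
      { url: "/example.jp2", mimeType: "image/jp2", bytes: 150000 },
    ],
    "SEO text",
  ),
);
console.log(lazyImageMarkup("/photo.jpg", "Photo of the product"));
```

Where the browser supports native lazy loading, the `<noscript>` pattern is not needed: adding `loading="lazy"` to a normal `<img>` is enough.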

How to reduce the weight of fonts

We will devote a full article to font optimization. For now, I will point out that one of the best techniques to reduce font weight is subsetting, which consists of removing unused characters from the font. To do this we can use an online tool such as https://everythingfonts.com/subsetter or an offline command-line tool such as https://github.com/fonttools/fonttools.
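Subsetting needs the list of characters the site actually uses. A small TypeScript sketch that extracts them from a text and builds a command line for fontTools’ `pyftsubset` tool, using its `--unicodes`, `--output-file` and `--flavor` options (WOFF2 output requires the Python `brotli` package). The font and output file names are hypothetical, and the command is only built, not executed:

```typescript
// Formats the distinct characters of a text as the comma-separated list of
// code points accepted by pyftsubset's --unicodes option (e.g. "U+0041,U+0042").
function unicodeList(text: string): string {
  const codePoints = new Set<number>();
  for (const ch of text) {
    // for...of iterates by code point, so characters outside the BMP stay whole.
    codePoints.add(ch.codePointAt(0) as number);
  }
  return [...codePoints]
    .sort((a, b) => a - b)
    .map((cp) => "U+" + cp.toString(16).toUpperCase().padStart(4, "0"))
    .join(",");
}

// Builds (but does not run) a pyftsubset command that keeps only those
// characters and writes a WOFF2 file.
function pyftsubsetArgs(fontFile: string, usedText: string, outputFile: string): string[] {
  return [
    "pyftsubset",
    fontFile,
    `--unicodes=${unicodeList(usedText)}`,
    `--output-file=${outputFile}`,
    "--flavor=woff2",
  ];
}

const args = pyftsubsetArgs("OpenSans-Regular.ttf", "Hola, ñandú", "opensans-subset.woff2");
console.log(args.join(" "));
```

This only suits text known in advance: if users can enter content, characters missing from the subset will be rendered with a fallback font.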

Also, of course, unused fonts and font variants should be removed.

Conclusion

Historically, when optimizing a page, the most important thing has always been the TTFB (Time To First Byte) of the HTML. But with the emergence of CSS and JavaScript frameworks and of images that take up more and more space, the new user-oriented metrics and Google’s algorithm update called Speed Update have made the weight of static resources as important as the TTFB, since it directly affects painting and interaction times. But don’t get me wrong: the TTFB is still very important, because unless we use HTTP/2 Server Push, it determines when the rest of the resources start to download. It’s just that nowadays, in web development, everything has to be optimized.