Written by Ramón Saquete

The Core Web Vitals are the new WPO metrics that Google will start taking into account as a ranking factor from May 2021. Google will also use these metrics to display non-AMP pages in the mobile “Top Stories” feature. Therefore, we should start preparing our websites for this new scenario now.

First of all, we should ideally perform a complete audit to find possible areas for improvement in WPO, content, popularity and, above all, indexability. Indexability must be correct in every respect for it to make sense to invest time in the rest.

At a basic level, Core Web Vitals are more intuitive than the metrics used in the past, but understanding them in depth and finding areas for improvement is just as technical.

In this article, we will explain what these metrics consist of and give some general guidance on how to tackle their improvement.

In previous articles, we explained what Core Web Vitals are, why they matter for user experience and how Google shows them in Google Search Console. Here, let’s analyze them technically and in detail to better understand how we can improve them.

Technical definition of Core Web Vitals

FID – First Input Delay

This is the time from the user’s first click until the browser is able to start responding to it.

It doesn’t matter whether the click will trigger any response or whether it lands on an element that runs JavaScript. The first click is the one that counts, and the page will not respond immediately if JavaScript is running at that moment, even if that code has nothing to do with the click: JavaScript runs on the same thread as the interface, so the interface is blocked while it executes.

In general, FID is directly affected by TBT (Total Blocking Time), the metric that tells us how long the interface is blocked during the initial load by heavy JavaScript tasks, those lasting longer than 50 ms on the device running them.

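Technically, TBT is defined as the sum of the execution time of the tasks lasting more than 50 ms between the moment the first DOM content is painted (FCP, First Contentful Paint) and the moment the page becomes interactive (TTI, Time to Interactive). TTI is measured as the end of the last long task before a window of 5 seconds with no tasks longer than 50 ms and no more than two network requests in progress. Only the part of each task that exceeds 50 ms is counted: 50 ms is subtracted from each task's duration before the durations are added together.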
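To make the arithmetic concrete, here is a minimal TypeScript sketch of the TBT sum described above. It assumes FCP and TTI have already been measured, so it does not compute TTI itself. The task timings are hypothetical values in milliseconds. As a simplification, a task is counted whole if it starts inside the FCP to TTI window. Tools such as Lighthouse may treat tasks that straddle those boundaries differently.

```typescript
// Simplified TBT model: only the part of each long task beyond 50 ms counts.
interface MainThreadTask {
  start: number;    // ms since navigation start
  duration: number; // ms
}

const LONG_TASK_THRESHOLD_MS = 50;

// Sums blocking time for tasks that start between FCP and TTI.
// Assumption: fcp and tti were measured elsewhere; tasks straddling
// either boundary are counted whole, which real tools may not do.
function totalBlockingTime(tasks: MainThreadTask[], fcp: number, tti: number): number {
  return tasks
    .filter((t) => t.start >= fcp && t.start < tti)
    .reduce((sum, t) => sum + Math.max(0, t.duration - LONG_TASK_THRESHOLD_MS), 0);
}

// Hypothetical trace: FCP at 1000 ms, TTI at 2220 ms (end of the last long task).
const tasks: MainThreadTask[] = [
  { start: 1200, duration: 30 },  // short task: 0 ms blocking
  { start: 1500, duration: 80 },  // 30 ms blocking
  { start: 2100, duration: 120 }, // 70 ms blocking
  { start: 9000, duration: 200 }, // after TTI: can affect FID, not TBT
];

console.log(totalBlockingTime(tasks, 1000, 2220)); // 100
```

With tasks of 30, 80 and 120 ms inside the window, only the last two block: (80 - 50) + (120 - 50) = 100 ms.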

However, JavaScript tasks that run periodically after the page has loaded (for example, to reload advertisements) and last longer than 50 ms also hurt FID, even though they fall outside the TBT window, because the user's first click may coincide with one of them.

LCP – Largest Contentful Paint

The time from when the page is requested until the largest image, video or text block visible in the viewport is painted.

The size calculation ignores any part of an element that is hidden or outside the viewport. In addition:

- For an image, the size used is the area the image occupies on the page, unless its intrinsic size is smaller, in which case the intrinsic size is used.

- For text, the size used is the smallest rectangle that encloses the text, excluding CSS margins, padding and borders.
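The two sizing rules above can be sketched as follows. This is a simplified model for illustration, not Chrome's implementation. The Rect shape and the helper names are hypothetical, and coordinates are CSS pixels relative to the viewport. For text, the sketch assumes we already have the boxes of the text lines, so margins, padding and borders are excluded naturally.

```typescript
// Simplified model of LCP candidate sizes (not Chrome's actual code).
interface Rect {
  x: number; // left edge, CSS px relative to the viewport
  y: number; // top edge
  width: number;
  height: number;
}

// Area of the part of `rect` inside a vw × vh viewport; hidden parts don't count.
function visibleArea(rect: Rect, vw: number, vh: number): number {
  const left = Math.max(rect.x, 0);
  const top = Math.max(rect.y, 0);
  const right = Math.min(rect.x + rect.width, vw);
  const bottom = Math.min(rect.y + rect.height, vh);
  return Math.max(0, right - left) * Math.max(0, bottom - top);
}

// Images: the visible on-page area, unless the intrinsic area is smaller.
function imageCandidateSize(
  rect: Rect, intrinsicWidth: number, intrinsicHeight: number, vw: number, vh: number,
): number {
  return Math.min(visibleArea(rect, vw, vh), intrinsicWidth * intrinsicHeight);
}

// Text: the smallest rectangle enclosing all line boxes, clipped to the viewport.
function textCandidateSize(lineBoxes: Rect[], vw: number, vh: number): number {
  if (lineBoxes.length === 0) return 0;
  const left = Math.min(...lineBoxes.map((r) => r.x));
  const top = Math.min(...lineBoxes.map((r) => r.y));
  const right = Math.max(...lineBoxes.map((r) => r.x + r.width));
  const bottom = Math.max(...lineBoxes.map((r) => r.y + r.height));
  return visibleArea({ x: left, y: top, width: right - left, height: bottom - top }, vw, vh);
}

// A 400×300 image stretched to 800×600: the intrinsic area (120000) wins.
console.log(imageCandidateSize({ x: 0, y: 0, width: 800, height: 600 }, 400, 300, 1024, 768)); // 120000
```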

All visible elements are taken into account, whether or not they were created with JavaScript. Elements that JavaScript removes after the page has loaded are not taken into account, since they are no longer visible.

CLS – Cumulative Layout Shift

A score for the repaints the browser is forced to perform when elements in the visible part of the page change size or position while it loads.

More formally, it is the sum of the scores assigned to each element that changes size or position within the visible area while the page loads. Each score is the fraction of the viewport affected by the element that has changed, multiplied by the distance it has moved as a fraction of the viewport’s largest dimension. The affected area counts the space the element covers both before and after the change.
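Here is a minimal sketch of the score for one element moving from one position to another. It assumes a single shifting element per frame, and the helper names are hypothetical. Following the definition above, the affected area is the union of the element's visible area before and after the shift. The distance is measured against the viewport's largest dimension. A size change with no movement scores zero in this model.

```typescript
// Simplified layout shift score for one element that moves between two frames.
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Clip a rectangle to the viewport (parts outside it don't count).
function clip(r: Rect, vw: number, vh: number): Rect {
  const left = Math.max(r.x, 0);
  const top = Math.max(r.y, 0);
  const right = Math.min(r.x + r.width, vw);
  const bottom = Math.min(r.y + r.height, vh);
  return { x: left, y: top, width: Math.max(0, right - left), height: Math.max(0, bottom - top) };
}

const area = (r: Rect): number => r.width * r.height;

function overlapArea(a: Rect, b: Rect): number {
  const w = Math.min(a.x + a.width, b.x + b.width) - Math.max(a.x, b.x);
  const h = Math.min(a.y + a.height, b.y + b.height) - Math.max(a.y, b.y);
  return Math.max(0, w) * Math.max(0, h);
}

function layoutShiftScore(before: Rect, after: Rect, vw: number, vh: number): number {
  const a = clip(before, vw, vh);
  const b = clip(after, vw, vh);
  // Impact fraction: union of the visible areas before and after, over the viewport area.
  const impactFraction = (area(a) + area(b) - overlapArea(a, b)) / (vw * vh);
  // Distance fraction: largest horizontal or vertical move over the viewport's largest dimension.
  const moved = Math.max(Math.abs(after.x - before.x), Math.abs(after.y - before.y));
  const distanceFraction = moved / Math.max(vw, vh);
  return impactFraction * distanceFraction;
}

// CLS as described in the text: the sum of the individual shift scores.
function cumulativeLayoutShift(scores: number[]): number {
  return scores.reduce((sum, s) => sum + s, 0);
}

const score = layoutShiftScore(
  { x: 0, y: 0, width: 400, height: 200 },
  { x: 0, y: 100, width: 400, height: 200 },
  400, 400,
);
console.log(score); // 0.1875
```

In this example, an element covering the top half of a 400 × 400 viewport moves down 100 px. It affects 75% of the viewport and moves 25% of its largest dimension, so the shift scores 0.75 × 0.25 = 0.1875.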

Page elements may change size or position after the first paint for different reasons:

- When the web font assigned to some text finishes loading.

- When CSS styles (height, padding, margins, borders, …) are applied late, after the first paint, because these styles were not loaded early enough.

- When images load without space reserved for them, for example because their dimensions are not specified.

- When the height or other CSS properties are changed by JavaScript.

- When JavaScript adds or removes elements.

How to measure Core Web Vitals correctly?

Before looking at how to improve the three metrics that make up the Core Web Vitals, let’s see how we should collect their data.

First of all, we have to keep in mind that the LCP and CLS metrics depend on the following conditions external to the website:

- Size of the window or screen of the device on which the measurement is made.

- Device data transfer rate.

- Hardware resources available on the device to load the page at the time of the test. Here, both the age of the device and whatever else it is doing in parallel at that moment, whether the user is aware of it or not, will have an effect.

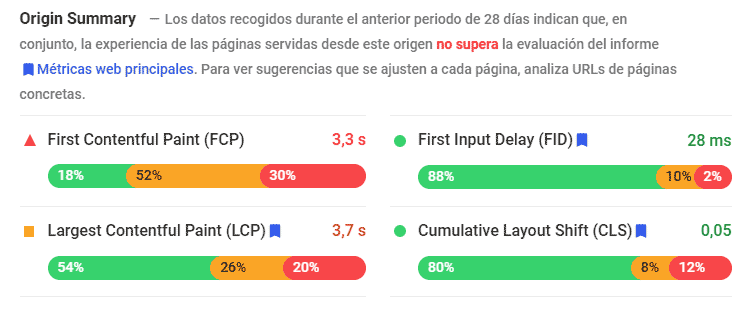

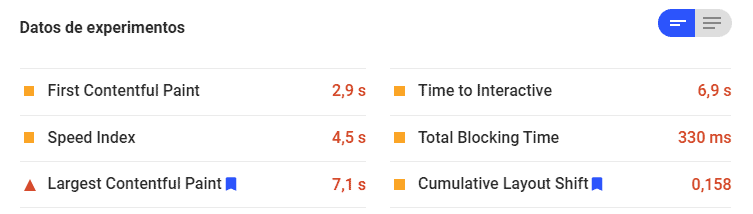

These two metrics are calculated when we run a test in PageSpeed Insights, but they are also collected in the Chrome User Experience Report, based on data gathered from real users over the last 28 days.

Since the window size varies with each user’s mobile or desktop screen, the statistical value collected from users is more reliable than the value PageSpeed Insights calculates at the time of the test. PageSpeed Insights always uses the same window size, so its result may differ from the value obtained from users.

The FID metric does not depend on window size, but it may depend on the device’s data transfer rate and will always be influenced by the available hardware resources. In addition, it is the only Core Web Vitals metric that cannot be measured directly in a test of the page. It is collected from real users, because it depends on when and where they first click. Its value depends on whether JavaScript happens to be running at that moment, so we need a statistical value rather than the value from a single test. However, TBT can be measured directly on the page and is directly correlated with FID.
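Because FID can only be collected from real users, a common approach is to record one value per page view and summarize the values statistically. In the browser, the raw values come from a PerformanceObserver observing the 'first-input' entry type, where FID is processingStart minus startTime. The sketch below assumes those entries have already been gathered; how they reach a server is out of scope. It summarizes them with the 75th percentile, the percentile Google uses when assessing Core Web Vitals. The nearest-rank percentile method is one choice among several, and the entry values are hypothetical.

```typescript
// Fields used from a 'first-input' PerformanceEventTiming entry.
interface FirstInputEntry {
  startTime: number;       // when the user interacted, in ms
  processingStart: number; // when event handlers could start running, in ms
}

// FID for a single page view.
function firstInputDelay(entry: FirstInputEntry): number {
  return entry.processingStart - entry.startTime;
}

// Nearest-rank percentile (p between 0 and 100) of the collected values.
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("no samples");
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based rank
  return sorted[Math.max(0, rank - 1)];
}

// Hypothetical entries gathered from four page views.
const entries: FirstInputEntry[] = [
  { startTime: 1000, processingStart: 1012 },
  { startTime: 2000, processingStart: 2240 }, // click landed during a long task
  { startTime: 1500, processingStart: 1535 },
  { startTime: 900, processingStart: 908 },
];
const fids = entries.map(firstInputDelay); // [12, 240, 35, 8]
console.log(percentile(fids, 75)); // 35
```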

Taking all of the above into account, the most accurate measurements available to us are those based on user data. But to find out which elements are affecting the metrics that PageSpeed Insights calculates, we will look at its “Diagnostics” section. There, the “Largest Contentful Paint element” item tells us which element LCP was measured on. The “Avoid large layout shifts” item lists the elements whose scores add up to the CLS, ranked from largest to smallest contribution.

To get a broader view of how these metrics behave at each screen size, I recommend the following Google Chrome extension. It shows which page elements contribute to the LCP and CLS metrics and lets us see how they change when measured with different window sizes:

Core Web Vitals extension for Google Chrome

As for FID, the best way to break down what affects it is the “Performance” tab of Google Chrome’s developer tools, where tasks that take longer than 50 ms to run are flagged with red warnings. This panel also shows the LCP, and clicking on it highlights the element on which it was calculated.

The developer tools can also show us the elements that affect CLS: under “More tools” > “Rendering”, check the “Layout Shift Regions” option. With this option enabled, elements that shift are highlighted as the page loads.

How to optimize Core Web Vitals?

How to optimize the FID?

JavaScript tasks that take longer than 50ms should be avoided. To do this we can:

- Optimize the programming of JavaScript code.

If, instead of optimizing the JavaScript code, we cheat and load it synchronously (embedded in the page, or as an external file without the async or defer attributes), we can easily improve this metric, since the page will not be painted until it is interactive. FID would then be practically zero, but this will not help us, because LCP will get worse. The right approach is to load JavaScript asynchronously and try to make each task run in less than 50 ms, so that neither metric is affected.

- Remove tasks that are not required for the initial load.

For example, if we need to bind the validation events of a form in the footer, we can delay running this code until the form enters the user’s viewport. The same applies to any other element that is not visible on the initial load.

If we use JavaScript modules, it is easier to isolate the tasks that do not need to run during the initial load and execute them later. This applies whether the modules come from a module format or loader such as CommonJS, AMD or RequireJS, from a web bundler such as webpack, or from the browser’s native module support. For example, on this website, the back-to-top arrow button at the bottom right is loaded as a separate module, and only when the user scrolls.

- Move part of the JavaScript code to web workers.

Web workers run on a thread separate from the interface, with the drawback that they have no access to the DOM. If parts of our JavaScript can be resolved on a separate thread, we can optimize this way.

- Remove unused JavaScript code.

A web bundler that supports the tree shaking technique is very useful here.

- Do not implement CSR (Client-Side Rendered) pages. Besides making indexing more difficult, painting the page with JavaScript on the client instead of on the server will give very poor FID results. In these cases, we recommend adapting the implementation to Universal JavaScript or using a pre-renderer.

- If we have to retrieve information with AJAX, try not to do it for above-the-fold elements, and delay it for below-the-fold elements.

- Avoid loading unneeded polyfills in modern browsers.
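One common way to keep tasks under 50 ms, not described in the original article, is to split long work into slices and yield to the event loop between them, so the browser can handle a click in between. Below is a minimal sketch, assuming the work can be divided into independent items. processInSlices is a hypothetical helper. The budget is checked between items, so a slice can overrun by up to one item's duration, which is why the default budget leaves some margin below 50 ms. For code tied to below-the-fold elements, such as the footer form example above, the browser's IntersectionObserver API is the usual way to wait until the element enters the viewport.

```typescript
// Runs `work` on every item, yielding to the event loop whenever the current
// slice has used up `budgetMs`, so the browser can handle input in between.
// The budget is only checked between items, so one slow item can still
// overrun it; the default leaves a margin below the 50 ms long-task limit.
async function processInSlices<T>(
  items: readonly T[],
  work: (item: T) => void,
  budgetMs = 40,
): Promise<number> {
  let slices = items.length > 0 ? 1 : 0;
  let sliceStart = Date.now();
  for (let i = 0; i < items.length; i++) {
    work(items[i]);
    const moreLeft = i < items.length - 1;
    if (moreLeft && Date.now() - sliceStart >= budgetMs) {
      // Yield: the remaining items continue in a later task scheduled with setTimeout.
      await new Promise<void>((resolve) => setTimeout(resolve, 0));
      slices++;
      sliceStart = Date.now();
    }
  }
  return slices; // number of separate tasks the work was spread across
}

// Usage: the number of slices depends on how long each item takes.
let total = 0;
processInSlices([1, 2, 3, 4, 5], (n) => { total += n; }).then((slices) => {
  console.log(`sum ${total} computed in ${slices} slice(s)`);
});
```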

How to optimize the LCP?

Depending on which element LCP is calculated on in each of the site’s templates, some points in the following list will deserve more attention than others:

- The first thing is to optimize TTFB (Time To First Byte) by using caches, using a CDN, improving server code, optimizing database queries, server resources and service configuration.

- Use a service worker to cache the website or part of the website in the user’s browser and only request a new version when the content has been updated (cache-first policy).

- Advance critical CSS and delay non-critical CSS.

- JavaScript should always be loaded asynchronously, the only exception being critical code. In any case, we should avoid critical JavaScript that paints elements above the fold. But if it exists and there is no alternative, we will embed it in the HTML.

- Related to the previous point, we will avoid CSR during the initial load, for both visible and non-visible elements.

- Remove unused CSS and JavaScript and use Brotli compression to optimize the download.

- Optimize the compression, format and available sizes of images, especially if the image is displayed above the fold. Ideally, we should specify the sizes attribute of the <img> tag to tell the browser what share of the viewport width the image occupies (for example, 50vw) before the CSS has loaded. In the srcset attribute, we will list several image sizes so that the browser can choose the most appropriate one.

- Avoid using the lazy load technique on images above the fold and use it on images below the fold.
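To illustrate how sizes and srcset work together, here is a simplified model of how a browser might choose a candidate. It multiplies the slot width given by sizes by the device pixel ratio and takes the smallest image at least that wide. Real browsers are allowed to choose differently, for example by reusing a larger image already in cache. The file names and helper functions are hypothetical.

```typescript
// Simplified model of how a browser may choose from `srcset` width descriptors.
interface Candidate {
  url: string;   // hypothetical file name
  width: number; // intrinsic width in px (the "w" descriptor)
}

// Builds the srcset attribute value, e.g. "hero-480.jpg 480w, hero-960.jpg 960w".
function buildSrcset(candidates: Candidate[]): string {
  return candidates.map((c) => `${c.url} ${c.width}w`).join(", ");
}

// Picks the smallest candidate at least slotCssWidth × dpr pixels wide,
// falling back to the largest one. slotCssWidth is the width resolved
// from the `sizes` attribute (e.g. 50vw on a 1280 px viewport = 640).
function pickCandidate(candidates: Candidate[], slotCssWidth: number, dpr: number): Candidate {
  if (candidates.length === 0) throw new Error("empty srcset");
  const needed = slotCssWidth * dpr;
  const sorted = [...candidates].sort((a, b) => a.width - b.width);
  for (const c of sorted) {
    if (c.width >= needed) return c;
  }
  return sorted[sorted.length - 1];
}

const candidates: Candidate[] = [
  { url: "hero-480.jpg", width: 480 },
  { url: "hero-960.jpg", width: 960 },
  { url: "hero-1440.jpg", width: 1440 },
];
console.log(buildSrcset(candidates)); // hero-480.jpg 480w, hero-960.jpg 960w, hero-1440.jpg 1440w
console.log(pickCandidate(candidates, 640, 2).url); // hero-1440.jpg
```

For instance, with sizes="50vw" on a 1280 px wide viewport and a device pixel ratio of 2, the slot is 640 CSS px and the image needs 1280 physical pixels, so hero-1440.jpg is chosen in this model.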

How do cookie notices affect the LCP?

The role that annoying cookie notices play in LCP is a problematic issue.

If our cookie notice is detected as the largest element, simply making it appear with an animation using window.requestAnimationFrame means it will no longer be considered a largest-content candidate. LCP will then be measured on the element that is actually the largest on the page. We can also keep it from being taken into account by making it appear after a few seconds (a more annoying option) or by making it smaller.

Therefore, simply adding an animation or removing it can generate a significant change in our LCP score, depending on how this cookie notice is implemented and how many new visitors we have.

This raises several questions. Is it fair for a website to be penalized in LCP because of the cookie notice when, despite being loaded above the fold, it is content that usually bothers users a lot and that they will only see on their first visit? Is it legitimate for a website to misrepresent its LCP by loading the cookie notice without animations?

Perhaps Google will adjust this metric in the future to disregard cookie notices. Or perhaps legislators will realize how absurd it is for every website to show a cookie notice asking the same thing, when this could be configured globally in the browser. In any case, the right approach is to optimize the website’s LCP both when the cookie notice is displayed and when it is not. The latter is the case for users who have already accepted or rejected the notice, or who use a cookie notice blocker in their browser.

How to optimize CLS?

It is mainly about optimizing CSS and avoiding CSR, paying special attention to the following:

- Advance critical CSS and delay non-critical CSS.

- Avoid changing the layout with JavaScript, including adding or removing elements.

- Specify the width and height attributes of images so that the browser can reserve space for them in the correct proportion. This technique only works in modern browsers (2020 versions), and only for images that are not lazy-loaded and whose proportions do not change with screen size. For other situations and older browsers, it is better to reserve space with the technique known as CSS aspect ratio boxes. Iframes, videos and other blocks that change size during loading can also benefit from this technique.

- Optimize critical fonts, mainly by using the font-display: swap descriptor in @font-face and preloading them with <link rel="preload" as="font" crossorigin>.
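The arithmetic behind both ways of reserving image space can be sketched like this. With width and height attributes, the browser derives the height from the rendered width. With a CSS aspect ratio box, vertical padding expressed as a percentage gives the same proportion, because such percentages are relative to the container's width. The .ratio-box class name and the helper functions are hypothetical.

```typescript
// Height the browser can reserve from the width/height attributes once it
// knows the rendered width (e.g. an image styled with width: 100%).
function reservedHeight(renderedWidth: number, attrWidth: number, attrHeight: number): number {
  return (renderedWidth * attrHeight) / attrWidth;
}

// CSS aspect ratio box: padding-top in % is relative to the container width,
// so the box keeps the image's proportion before the image loads.
// ".ratio-box" is a hypothetical class name.
function aspectRatioBoxCss(attrWidth: number, attrHeight: number): string {
  const percent = Number(((attrHeight / attrWidth) * 100).toFixed(4));
  return [
    `.ratio-box { position: relative; height: 0; padding-top: ${percent}%; }`,
    `.ratio-box > img { position: absolute; top: 0; left: 0; width: 100%; height: 100%; }`,
  ].join("\n");
}

console.log(reservedHeight(360, 1920, 1080)); // 202.5
console.log(aspectRatioBoxCss(1920, 1080));
```

For a 1920 × 1080 image, both approaches reserve a box whose height is 56.25% of its width.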

Conclusion

Optimizing Core Web Vitals is not easy: it requires carrying out practically every optimization that can be performed on a website. It is therefore advisable to analyze optimization needs in depth and tackle first the parts that most affect performance, focusing on how each change will affect the set of metrics as a whole and the users.