Written by Aurora Maciá

Last week, I shared an article on LinkedIn that I had really enjoyed, originally published on Substack. It discussed what the author called the “compression culture”: the growing trend of summarizing information, something now both demanded by users and delivered by almost every tool, platform, and app. She talked about valuing depth over speed, about the importance of process, consistency, and repetition in mastering any skill. I liked it so much that I shared it almost instantly on both my social networks.

Ironically, just a few days later I stumbled upon a post in which another Substack user accused that author of plagiarism: in one of her articles, she had copied entire paragraphs verbatim, which cast doubt on her authorship of the rest of her posts. Yes, someone who had been defending the importance of repeating something many times to achieve mastery had chosen to take a shortcut and copy others’ work. It’s still unclear whether her other articles contain plagiarism, but just in case, I deleted the posts where I had recommended hers. I blamed myself for not researching the author, a complete stranger to me. I guess the fact that she was among the Top Authors in Substack’s Technology category (when I shared the article, she was #8; at the time of writing this, she’s #1) gave me a false sense of security. As if any sort of blue checkmark from a social network were beyond question. As if certain platforms didn’t reward virality over integrity.

Digital exhaustion

I joined Substack, as I imagine many others did, to get away from microblogging and have the chance to read full arguments, original reflections, and nuanced thinking. To discover good writers. And luckily, I have. But I’ve also noticed that, like every other platform, Substack opens its doors to plagiarism, unchecked AI-generated content (already nicknamed AI slop), misinformation, and even hate speech. And I’m starting to feel a certain fatigue.

I’m not the only one.

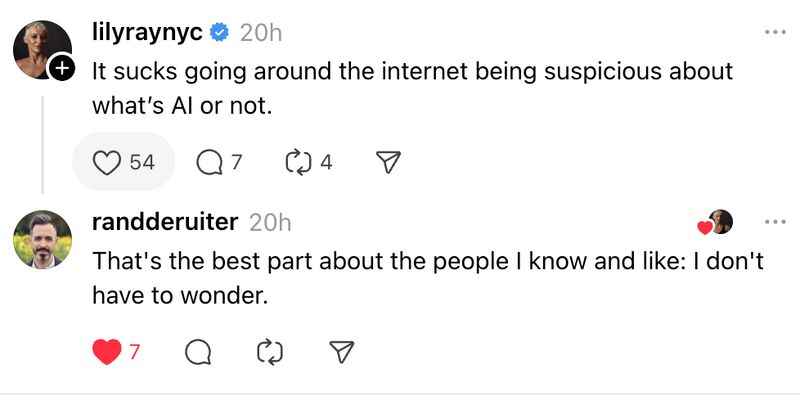

This post by Jay Acunzo shared the following exchange between Lily Ray and Rand Fishkin:

I think more and more people will start to feel like Lily or like me — tired of having to stay hyper-alert just to avoid swallowing fake news, synthetic images, copy-pastes, or made-up facts that sound real. We consume enormous amounts of information, and running it through so many filters all the time — while essential — is exhausting.

A new way to navigate

A few (rare) companies are offering to help, like DuckDuckGo, which a couple of weeks ago announced a new setting to hide AI-generated images from search results. However, tweaking these settings on every single platform doesn’t sound appealing either. I lean more toward Rand’s simple answer: if you know and trust the source, you don’t need as many barriers. And I think this will be the formula many of us choose to navigate the Internet in the era of infoxication (information overload).

Check the signature first, then the content. Go to what’s familiar and respected, while investigating further when something feels unfamiliar. Even if it doesn’t become a mainstream behavior, it would be the most responsible one. Otherwise, we risk enriching plagiarists, spreading fake news, and amplifying dangerous narratives backed by lies. As citizens of the web, we have the right to access quality information — but also the duty to protect the online environment.

What role will your project play in the coming years?

In this scenario, companies now face a crucial crossroads.

The first option is to chase (or keep chasing) fast growth through cheap, paraphrased, and sometimes even misleading content. This can work for a while — until a Google de-indexing turns the dream into a nightmare overnight. You also risk your brand being permanently tied to such content, damaging its authority and reputation. On top of that, agencies recommending these tactics often underestimate users’ discernment, assuming they’ll consume whatever’s put in front of them. Nobody genuinely wants to listen to a podcast with two synthetic voices agreeing with each other. Just because something can be done doesn’t mean it’s a good idea.

These tactics create the kind of environment described by the Dark Forest of the web and Dead Internet theories. The web becomes a hostile territory filled with low-quality content and robots talking to and feeding off each other.

If this becomes widespread, humans will retreat to the cozy web, meaning closed platforms like messaging apps. There, users will feel more comfortable talking and sharing, but they’ll be out of your reach. To better understand these concepts, I recommend watching this talk by Maggie Appleton:

The second option is to take advantage of the need for trusted references and establish yourself as a reliable source. To offer the reassurance of deep research, recognizable authors, and verifiable facts. To follow an ethical code and respect your users. To offer guarantees and proof. Producing this kind of content isn’t easy or cheap. That’s why it shouldn’t be handed over freely to AIs unless they’re sending traffic and benefits back your way. Thanks to initiatives like Cloudflare’s Pay-Per-Crawl, it’s now possible to identify which bots are crawling your site so you can block those that exploit your content without contributing to your business. In this post, The Atlantic’s leaders explain how they’ve implemented it.

Choosing the second option requires greater investment and long-term vision. But the reward is that, in a sea of sameness, your name stands out. That in a world full of junk, you’re the diamond restoring an Internet user’s hope. That people want to share your content not only because they trust you but because they’re proud to associate themselves with a reputable source like you. That you lead the wave that uplifts and improves our digital ecosystem.

The choice is yours.

At Human Level, we design customized, ethical visibility strategies that drive business impact. Contact us and we’ll show you how to make sure you get found.