Written by Fernando Maciá

The growing adoption of artificial intelligence platforms as search interfaces is radically transforming how users find information online and make purchasing decisions. If your company doesn’t appear in responses from Google AI Mode, ChatGPT, Gemini, or Perplexity, you’ll be less likely to be found by your potential customers.

Over the last quarter-century, search engine optimization, primarily on Google, has been the cornerstone of digital visibility. Companies invested significant resources to rank in top positions for their most popular searches, which translated into quality traffic and conversions. However, this paradigm is now undergoing a deep transformation.

The emergence of Large Language Models (LLMs) and AI-generated responses has created a new scenario where the question is no longer just “What position do I hold in Google results?” but rather, “Do I also appear in the answers my potential customers receive when they ask an AI?”

This shift carries profound strategic implications for any business that relies on organic customer acquisition. Let’s look at what they are.

The New Answer Ecosystem

One of the key changes is that Google’s dominance as the default search engine has been broken. Users now choose between multiple search platforms based on their profile and needs, the type of query, and their personal preferences.

This ecosystem includes traditional search engines like Google and Bing (which now also incorporate AI layers like AI Overviews, AI Mode, or Copilot), conversational chatbots like ChatGPT, Claude, or Gemini, assistants integrated into productivity tools, search interfaces on social networks and forums, voice assistants like Siri or Alexa, and vertical search engines specialized in specific products or sectors.

The conversion funnel analogy, as a linear representation of a user’s progression through their purchasing decision process, is moving further and further away from reality. Consequently, the ability to correctly attribute each conversion to a single channel is being diluted.

And this is only the first of our problems.

Personalized Results

Another aspect that makes this new scenario particularly complex is that the answers we get from AI chatbots are personalized. That is, the same prompt will generate different results depending on the user’s profile, their conversation history, the documents and applications they have granted access to, and the context of the query itself. A purchasing manager, a maintenance engineer, and a sustainability lead, for example, would receive different recommendations even if they were to use a literally identical prompt with the same AI model.

Zero-Volume Searches

We also lack data on the popularity of each query in AI search engines: we don’t know how many people entered the same prompt in a given period. The conversational interaction inherent to these platforms invalidates the SEO methodology we have used until now to select the queries we wish to appear for.

The prompts users use on AI platforms are significantly longer and more specific than traditional keywords. While a typical Google search consists of an average of just over three words, prompts in ChatGPT average no less than 23 words, according to a Semrush study.

Position Is Not the Only Measure of Success

Finally, the position at which an AI result mentions or cites a website correlates less clearly with click-through rate (CTR) than one might expect. To begin with, in most cases there will be no direct click from the result itself. And where there is one, the probability of earning it will depend more on being linked in the block most aligned with the user’s search intent than on the absolute position in the result. Let’s see why.

Results generated by AI platforms are usually presented with a thematically grouped structure. For example, if we enter the prompt “best video games” in ChatGPT, the result we get is structured into blocks:

- Best video games of all time.

- Best recent video games.

- Best RPGs.

- Best action/adventure games.

- Multiplayer/competitive games.

- Etc.

Faced with this type of categorization, the user is very likely to quickly scroll to the block most aligned with their initial intent (let’s assume the searcher persona in our example leans more toward action and adventure games) and focus their attention on that block. In this case, links in this section would have a much higher click-through probability than those included in the “best video games of all time” section, even if the latter appeared in a much higher position. In other words, in generative results, a higher position on the screen does not necessarily mean a higher CTR.

Strategic Blindness

All these factors combined create a situation of strategic blindness: we don’t know what is being searched for (prompts are unpredictable), nor how many people are searching for it (there is no search-volume data per prompt), nor whether we are appearing (results are stochastic), nor for whom (results are personalized).

We must recognize, therefore, that at least part of the methodology we have been applying for search engine optimization is not directly transferable to the goal of appearing in AI responses.

Just as ranking on Google required studying how users searched and how the algorithm worked, appearing in AI results requires analyzing how people use these platforms and the technology that makes them function.

How LLMs Actually Work

To design an effective strategy, it is essential to understand how LLMs process information. Language models “learn” by breaking text into basic units (tokens), analyzing the semantic relationships between them, and building vector representations of meaning (embeddings). The architecture behind these systems (called the Transformer) processes full sentences, paragraphs, or articles to better understand the meaning of each word in its context.

This contextual understanding means that LLMs do not look for exact keyword matches; instead, they understand the underlying meaning of queries. Therefore, semantic relevance and conceptual clarity are now more important than the keyword repetition that dominated the “lexical” era of search engines. (Here you can delve deeper into how large language models, or LLMs, work).
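To make the difference between lexical and semantic matching more tangible, here is a minimal sketch. The three-dimensional vectors are invented by hand purely for illustration; real embeddings come from a trained model and have hundreds or thousands of dimensions. The function names and sample texts are also assumptions of this example.

```python
import math


def lexical_overlap(query: str, document: str) -> int:
    """Keyword-style matching: count distinct words shared by both texts."""
    return len(set(query.lower().split()) & set(document.lower().split()))


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (closer to 1.0 = more similar)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


query = "how do I cut energy costs in my factory"
relevant = "industrial efficiency audit to reduce electricity bills"
irrelevant = "factory outlet: cut prices on shoes"

# Hypothetical toy embeddings, written by hand (NOT produced by a real model).
TOY_EMBEDDINGS = {
    query: [0.9, 0.1, 0.2],
    relevant: [0.8, 0.2, 0.3],
    irrelevant: [0.1, 0.9, 0.1],
}

for text in (relevant, irrelevant):
    overlap = lexical_overlap(query, text)
    similarity = cosine_similarity(TOY_EMBEDDINGS[query], TOY_EMBEDDINGS[text])
    print(f"{text!r}: shared words={overlap}, cosine={similarity:.2f}")
```

With these toy values, the irrelevant text shares two words with the query ("cut" and "factory") while the relevant one shares none, yet the relevant text is the one whose vector sits closest to the query. That is the intuition behind saying that conceptual clarity matters more than keyword repetition.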

One of the main limitations of LLMs is that their knowledge of current events is limited by their training data. This is where RAG comes into play.

The Role of RAG (Retrieval-Augmented Generation)

Retrieval-Augmented Generation (RAG) is a key concept in the operation of platforms like ChatGPT or Gemini. It refers to a system that combines the base knowledge the model extracts from its training data with up-to-date information from external sources. When a user asks a question, the system searches for relevant information in real time to complement its response.

This has a direct implication for companies: your website, your PDFs, your technical documentation, customer testimonials, case studies, tutorials, etc., can be that source of fresh information that the AI uses to answer. If your content is inaccessible, confusing, poorly structured, or difficult to interpret, the AI will turn to your competition’s content. Your goal is to avoid that.
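The retrieve-then-generate flow described above can be sketched in a few lines. This is a simplified illustration, not how any specific platform is implemented: the word-overlap score stands in for the retriever (real systems typically rely on embeddings or a search index), the final call to a language model is left out, and the document names and contents are hypothetical.

```python
def score(query: str, text: str) -> int:
    """Toy relevance score: number of distinct query words found in the text."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k documents with the highest toy score."""
    ranked = sorted(
        documents,
        key=lambda name: score(query, documents[name]),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Assemble the retrieved passages and the question into a single prompt."""
    sources = retrieve(query, documents, k)
    context = "\n".join(f"[{name}] {documents[name]}" for name in sources)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"


# Hypothetical company content that a retriever could have access to.
DOCUMENTS = {
    "acme-pump-datasheet": "the acme p200 pump handles corrosive fluids up to 90 degrees",
    "acme-case-study": "a chemical plant cut downtime after installing the acme p200 pump",
    "acme-careers": "join our team and work with us in valencia",
}

question = "which pump handles corrosive fluids"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)  # this text is what would be sent to the language model
```

The point of the sketch is the selection step: only the passages that best match the question reach the model, so content that is missing, vague, or hard to parse never becomes part of the answer.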

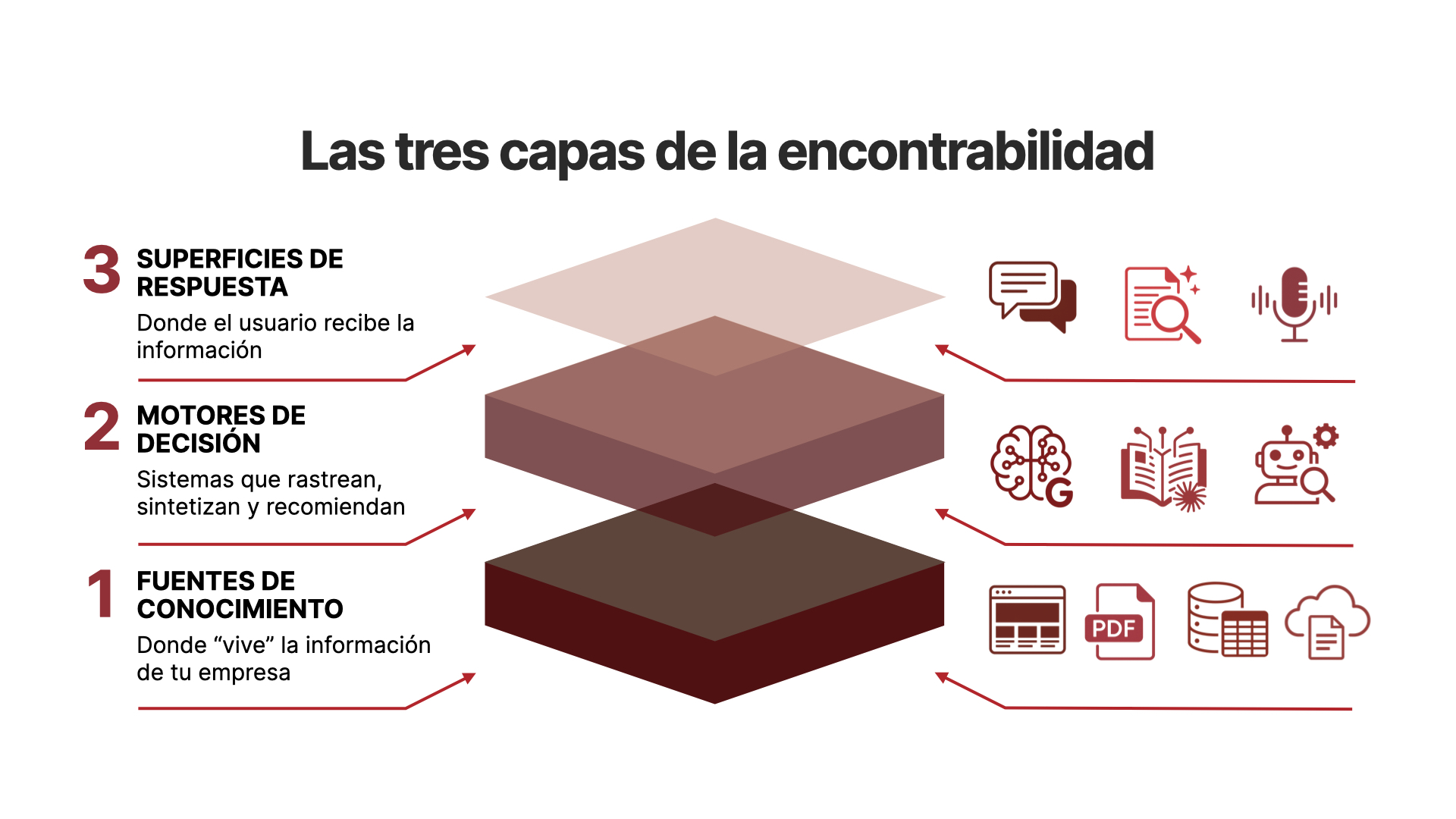

The Three Layers of Findability

To strategically approach this new scenario, it’s useful to conceptualize findability in three interconnected layers:

Layer 1: Knowledge Sources

This is where information about your company and your value proposition resides. It includes your corporate website, product sheets, technical documentation, FAQs, blog, catalogs, user manuals, and your content on third-party platforms such as social networks, comparison sites, industry directories, marketplaces…

The key question here is: where does your organization’s knowledge actually live? If it resides only in the heads of your senior technicians, in printed catalogs for trade shows, or in scattered or outdated PDFs on your website, you won’t be adequately feeding the AI ecosystem. This is where we have the greatest room for maneuver to improve a website’s findability.

Layer 2: Decision Engines

These are the systems that crawl information and recommend the best options to users: ChatGPT, Gemini, Claude, Perplexity, Copilot, and also the AI layers integrated into traditional search engines, like Google AI Overviews, Web Guides, or AI Mode. These engines collect and synthesize information from multiple sources, identify matching patterns to validate it, and transform it into personalized responses for different user profiles.

Layer 3: Response Surfaces

This is where the end user receives the information: chat interfaces of AI platforms, AI Overviews, AI Mode or Web Guides in Google, voice assistants, productivity tools with integrated AI… What all these responses have in common is that they satisfy the user’s query, often without the need for the user to visit your website.

Strategic Decisions for the CEO

Faced with this new paradigm, executives must take a proactive role regarding four fundamental aspects:

Knowledge Digitization

Where does your company’s knowledge live and how do you make it accessible so the AI can take it into account? This involves auditing the entire corporate knowledge base: identifying what information exists only in physical format or in employees’ memories, what documentation is scattered in inaccessible formats, and what technical knowledge hasn’t been formalized yet. It is the starting point to determine how much of what is currently published and accessible on our website is useful for AI-powered search engines.

By formalizing, structuring, and making information accessible, we build the foundation of our digital footprint.

Entity Building

Is your company a recognizable entity for AI systems? LLMs and the search systems behind them associate entities (companies, products, people, concepts, etc.) with one another, much as a knowledge graph does. Your goal is for your brand to be a clearly defined entity associated with the problems you solve, the sectors you serve, and the attributes that differentiate you as a company. Only then will you appear in queries related to them.

By identifying the entities we want our brand and products to be associated with, we align our digital identity with our corporate goals.
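One way to make these associations explicit is to describe the company with schema.org structured data. This technique is our own illustration rather than something covered in the talk, and whether a given AI platform reads this markup is an assumption, not something this article establishes. The sketch below generates an `Organization` description in JSON-LD; the company name, URLs, and topics are hypothetical.

```python
import json


def organization_jsonld(
    name: str,
    url: str,
    description: str,
    same_as: list[str],
    knows_about: list[str],
) -> str:
    """Serialize a schema.org Organization description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": same_as,          # profiles that refer to the same entity
        "knowsAbout": knows_about,  # topics the organization is associated with
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Hypothetical company used only for illustration.
markup = organization_jsonld(
    name="Acme Pumps",
    url="https://www.example.com",
    description="Manufacturer of industrial pumps for corrosive fluids.",
    same_as=["https://www.linkedin.com/company/example"],
    knows_about=["industrial pumps", "chemical processing", "predictive maintenance"],
)
print(markup)
```

The resulting JSON would typically be embedded in a page inside a `<script type="application/ld+json">` element. Whatever the format, the useful discipline is the same: deciding in writing which problems, sectors, and attributes the brand should be tied to, and describing them identically everywhere.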

Reputation Management

What do others say about your company? AI tends to recommend what it perceives as the industry consensus. Mentions in specialized media, customer testimonials, published case studies, certifications and approvals, as well as online reviews and ratings, directly influence the likelihood of being recommended.

By controlling and feeding the information shared about our brand and products on third-party sites, we extend and validate our digital footprint to be identified as a recommended option by AI.

Governance and Processes

Finally, who decides what content is shared, at what level of detail, and how it is kept up to date? This decision is key because obsolete information that AI replicates, mentions, or links to can damage your reputation. Clear processes are needed to approve, publish, and maintain the corporate knowledge base we make available to AI.

By establishing processes to keep our information updated and aligned with our objectives, we ensure coherent communication.

A Practical Framework for AI Findability

And how do we put all this into practice? At Human Level, we suggest you check each of the points on the following roadmap:

- Visibility in sources: where does your company appear today? Evaluate your presence on your own website and how you appear via partners, in B2B catalogs, in industry media, and in professional associations. Is there more information you can make accessible?

- Entity clarity: is it clear what your company does? Define your products, the sectors you serve, the problems you solve, use cases, and your main competitive advantages. And be consistent when describing them both on your website and on third-party platforms.

- Knowledge depth: don’t just publish product sheets; publish complete technical guides, comparisons and analyses, answers to specific pain points for each profile involved in a purchasing decision, and industry best practices. Try to imagine the prompts where you would like to be part of the answer.

- Reputation and external signals: actively promote your presence in specialized media, your participation in associations, your contribution to technical publications, customer testimonials, and your certifications.

- Measurement beyond the click: it’s no longer enough to measure visits and positions; we must monitor if we are cited in AI responses and what share of voice we have compared to our competition.
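The last point, measuring share of voice, can be approximated with a simple count. Because results are stochastic, the same prompt should be run several times and the responses collected. The sketch below assumes those responses are already available as plain text; the brand names and sample answers are invented, and the definition used here (a brand’s share of all tracked brand mentions) is only one of several possible ones.

```python
import re
from collections import Counter


def share_of_voice(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Share of tracked brand mentions per brand.

    A brand counts at most once per response (case-insensitive, whole word).
    """
    counts: Counter[str] = Counter()
    for text in responses:
        for brand in brands:
            if re.search(rf"\b{re.escape(brand)}\b", text, flags=re.IGNORECASE):
                counts[brand] += 1
    total = sum(counts.values())
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


# Hypothetical answers collected by repeating the same prompt on an AI platform.
SAMPLED_RESPONSES = [
    "For corrosive fluids, consider Acme or Borealis pumps.",
    "Borealis is a common choice; Cobalt is cheaper.",
    "Acme and Borealis both offer certified models.",
    "Many buyers shortlist Borealis.",
]

result = share_of_voice(SAMPLED_RESPONSES, ["Acme", "Borealis", "Cobalt"])
for brand, share in result.items():
    print(f"{brand}: {share:.0%}")
```

Tracking this figure over time, per prompt and per platform, gives a rough substitute for the position reports we were used to in traditional SEO.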

The Findability Manifesto

To summarize, four principles should guide our strategy when AI becomes the primary recommender for our customers:

- Search is now conversation: users don’t type keywords; they ask complex questions and expect direct answers.

- Keywords are no longer the only starting point: entities, searcher personas, and brand will increasingly be the true focus of the strategy.

- Beyond the web: we must manage our entire digital footprint, including the publication of technical documentation, media presence, and facilitating and managing content related to our company on third-party platforms through a digital PR strategy.

- Human authority is the only quality filter: in a sea of artificial content, real experience, documented case studies, and industry reputation are the ultimate differentiators.

Findability is no longer just about showing up on Google. It’s about being a trusted source that these AI platforms turn to when they have to advise your potential customers. If the AI doesn’t recommend you, you won’t even make it to the first call.

This post is a summary of my presentation “Findability: How AI Redefines Online Search,” which I delivered in December 2025 at the AI Applied to the Web Forum organized by SPRI Enpresa Digitala and Mondragon Unibertsitatea.

Would you like us to study together how to apply our online findability methodology to your specific project? Dozens of clients are already taking advantage of it. Contact us so we can help you.