Written by Miguel Ángel Culiáñez

What is the crawl budget?

The crawl budget could be defined as the amount of time Google spends crawling and indexing the content on a given website.

How is it measured?

There is a tendency to associate this level of attention with the time Google’s crawlers or “spiders” (also known as bots) spend carrying out the crawl, although it should be borne in mind that time, as a unit of measurement, always translates into consumption of energy resources. Therefore, this attention could also be measured in monetary terms, which is why the concept includes the word “budget” in its name.

Some people prefer to use the extent of the crawl performed by the spiders as the unit of measurement for the crawl budget, that is, the total number of pages that Google manages to crawl on each visit to the site. This is mainly because it is data we can consult through our Google Search Console account. The time, energy and money that Google invests in crawling our website, on the other hand, is something only those in Mountain View know. However, even if we had this information, the total volume of crawled content would still be a consequence of having a higher or lower crawl budget. In other words, the more time, energy and money Google spends crawling a site, the higher the volume of pages crawled and, hopefully, indexed, or at least the more likely they are to be indexed.
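Besides Search Console, the number of pages Googlebot requests can be approximated from the web server’s own access logs. The following Python sketch counts, per day, the requests whose user agent identifies itself as Googlebot. It assumes logs in the common Apache/Nginx “combined” format, and the function name `googlebot_hits_per_day` is hypothetical. Since user agents can be spoofed, a rigorous check would also verify the requesting IP addresses.

```python
import re
from collections import Counter
from datetime import datetime

# Assumed "combined" log format, e.g.:
# 66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /a HTTP/1.1" 200 512 "-" "<user agent>"
COMBINED_LOG = re.compile(
    r'^\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '  # client, ident, user, [date:time zone]
    r'"[^"]*" \d{3} \S+ '                         # request line, status, bytes
    r'"[^"]*" "(?P<agent>[^"]*)"'                 # referer, user agent
)


def googlebot_hits_per_day(lines):
    """Count log lines per day whose user agent claims to be Googlebot.

    Lines that do not match the assumed format are skipped. The user agent is
    not verified here, so spoofed "Googlebot" requests are counted too.
    """
    hits = Counter()
    for line in lines:
        match = COMBINED_LOG.match(line)
        if match and "Googlebot" in match.group("agent"):
            day = datetime.strptime(match.group("day"), "%d/%b/%Y").date()
            hits[day] += 1
    return hits
```

For example, passing the lines of a (hypothetical) `access.log` file returns a `Counter` keyed by date, which can be compared over time with the figures reported by Search Console.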

In short, performing any activity requires time and energy, which translates into economic investment. It is necessary to keep this obvious point in mind to understand the root of the crawl budget concept, since crawling a website is not a free activity for Google, much less when it comes to crawling millions of websites a day. This is why the technology giant tries to optimize the resources it dedicates to crawling, which means limiting the amount of time allocated to each website.

Why is the crawl budget important?

Having a high crawl budget means that the bots of the different search engines crawl our website more frequently, spending more time on it and analyzing a greater number of pages. This in turn increases the chance that more of our pages and their content will be taken into consideration in response to users’ different search intents and may rank higher in the search results.

In contrast, a low crawl budget worsens our chances of SEO success: search engines may not devote enough time to find and index our most recent content, or may end up doing so later than they do for our rivals. It may also take longer for them to detect and take into account improvements or updates made to previously indexed pages.

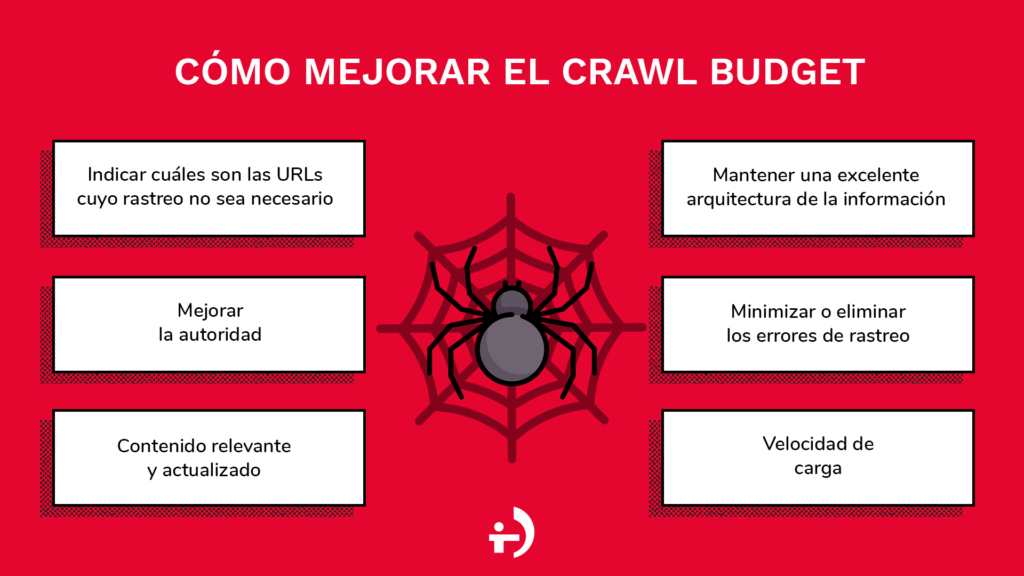

Six recommendations to improve your website’s crawl budget

At this point, the big question to ask ourselves is the following: what determines whether our website receives more or less attention from Google and the other search engines?

In this sense, it is logical to think that, if we want Google and the other search engines to devote more effort to crawling our website than to crawling those of our rivals, we must encourage them to do so and, of course, make things easy for them. Below, we discuss some key aspects to consider in order to improve the crawl budget of your web project:

- Indicate which URLs do not need to be crawled: one of the basic actions we should take is to be considerate of the robots and respect their valuable crawling time. If we know that our website has pages that contribute nothing in SEO terms, it is a thoughtful gesture to update the robots.txt file to tell Google which URLs do not need to be crawled.

- Improve authority: page authority is one of the criteria Google’s spiders use to determine how much attention to devote to a site when crawling it. It is therefore highly advisable to work in depth on linkbuilding and linkbaiting. A page that attracts the attention of many other quality portals related to our topic will also deserve special attention from Google. In this other article you will find very interesting tips on how to get quality links.

- Relevant and updated content: pages with quality, up-to-date content are relevant pages, and Google knows it. When a page has not been updated for some time, Google’s spiders usually interpret it as less attractive than other pages on the same site and, as a result, tend to show less interest in it and reduce or eliminate the time spent crawling it. In other words, why would Google repeatedly spend effort crawling a page that we ourselves, as site managers, do not pay enough attention to? Key pages therefore need to be updated periodically. Along these lines, adopting an evergreen content philosophy, keeping only the most relevant information and updating it on an ongoing basis, will also help optimize your own time and effort (not just Google’s), possibly achieving better results than a content strategy that favors quantity over quality.

- Maintain an excellent information architecture: it goes without saying that, when it comes to finding, classifying and evaluating information, Google’s spiders apply basic principles. One of them is that the better organized the information, the easier and faster this task will be. As obvious as it may seem, a website with a brilliant information architecture will see a direct improvement in its crawl budget. In this regard, it is also worth remembering the importance of always keeping our website’s sitemap file up to date. If your web project is an online store and you wish to learn more about information architecture, you may also find it interesting to read this other article on information architecture in e-commerce.

- Minimize or eliminate crawling errors: it is highly advisable to keep our website free of errors (or at least keep them to a minimum) that could hinder the work of search engines. We refer, for example, to broken internal links, 404 errors (whatever their cause) that do not yet have an appropriate redirect, pages with duplicate content, non-descriptive URLs, etc. In short, it is a matter of periodically reviewing our website and correcting any on-page errors or points of improvement we find, so that our content is as easy as possible to access and understand.

- Loading speed: last but not least is the loading speed of our site. Once again, this is a key element not only for satisfying search engines but also for users themselves; neither likes having to wait to access the content we intend to offer. Fast loading allows bots to devote as much of their time as possible to crawling, without having to wait longer than strictly necessary. If search engines come across an excessively slow page, its content will most likely not be crawled and taken into account, and the problem is all the greater when all or a large number of the site’s pages are affected. Many factors can make a page, or a website as a whole, excessively slow. In fact, it would be advisable to understand the key elements of WPO (Web Performance Optimization) to fully appreciate the complexity of this issue, but an unsatisfactory hosting service or excessively heavy images are some common causes behind loading speed problems.
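The first recommendation, telling Google which URLs do not need to be crawled, can be checked before a robots.txt change is published. This Python sketch uses the standard library’s `urllib.robotparser` to test which URLs a given set of rules would block. The rules and URLs (`/cart/`, `/search`, `www.example.com`) are hypothetical, and Python’s parser follows the classic robots.txt conventions, so it may not interpret every rule exactly as Googlebot does (path wildcards, for instance).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for an imaginary shop: cart and internal search pages
# add nothing in SEO terms, so crawlers are asked to skip them.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def build_parser(robots_txt):
    """Parse robots.txt content held in a string (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def crawl_permissions(parser, urls, user_agent="Googlebot"):
    """Return {url: True if user_agent may crawl it under the parsed rules}."""
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    checks = crawl_permissions(rules, [
        "https://www.example.com/blog/crawl-budget",
        "https://www.example.com/cart/checkout",
        "https://www.example.com/search?q=shoes",
    ])
    for url, allowed in checks.items():
        print("crawl" if allowed else "skip ", url)
```

Running this kind of check against a list of important URLs helps confirm that a new rule blocks only the pages it is meant to block.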
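Keeping the sitemap file up to date, as mentioned in the information architecture recommendation, is easy to automate. The following Python sketch builds a minimal XML sitemap following the sitemaps.org protocol, with a `<lastmod>` date for each URL so that recently updated pages can be signaled. The page list and URLs are hypothetical; in practice they would come from the site’s CMS or database.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return a UTF-8 encoded XML sitemap for an iterable of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # W3C Datetime date (YYYY-MM-DD), as accepted by the sitemap protocol
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    # Hypothetical pages and modification dates
    sitemap = build_sitemap([
        ("https://www.example.com/", date(2024, 1, 15)),
        ("https://www.example.com/blog/crawl-budget", date(2024, 3, 2)),
    ])
    print(sitemap.decode("utf-8"))
```

Regenerating the file whenever content changes keeps `<lastmod>` consistent with the periodic updates recommended for key pages.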
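To support the periodic reviews recommended for crawling errors, broken internal links can be found by combining the links on each page with the HTTP status returned by their targets. This Python sketch assumes the HTML of each page and the status codes have already been collected (for example, by a crawling tool); the function names and example data are hypothetical. It reports each dead URL (404 or 410) together with the pages that still link to it, which shows where to fix the link or add a redirect.

```python
from collections import defaultdict
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def internal_links(page_url, html):
    """Return absolute, fragment-free links pointing to the same host as page_url."""
    parser = LinkExtractor()
    parser.feed(html)
    host = urlparse(page_url).netloc
    links = []
    for href in parser.hrefs:
        absolute = urldefrag(urljoin(page_url, href)).url
        if urlparse(absolute).netloc == host and absolute not in links:
            links.append(absolute)
    return links


def broken_link_report(pages, status):
    """Map each dead internal URL (404/410) to the pages that still link to it.

    pages: {page_url: html}; status: {url: HTTP status code seen when crawling}.
    """
    report = defaultdict(list)
    for page_url, html in pages.items():
        for target in internal_links(page_url, html):
            if status.get(target) in (404, 410):
                report[target].append(page_url)
    return dict(report)
```

Grouping by dead URL rather than by page makes it easier to decide, for each one, whether to restore it, redirect it or update the links pointing to it.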
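The link between loading speed and crawling can be illustrated with a deliberately simplified model: if a bot had a fixed amount of time for a site and fetched pages one after another, the number of pages it could crawl would be that time divided by the average response time. The one-minute budget and the response times below are hypothetical, and real crawlers fetch in parallel and adapt their pace, so this Python sketch only shows the direction of the effect, not how Google actually allocates crawling time.

```python
def pages_within_budget(budget_ms, avg_response_ms):
    """Pages a bot could fetch sequentially within a fixed time budget.

    Simplified, hypothetical model: integer milliseconds, one request at a
    time, no parallelism or adaptive crawl rate.
    """
    if avg_response_ms <= 0:
        raise ValueError("avg_response_ms must be positive")
    return budget_ms // avg_response_ms


if __name__ == "__main__":
    budget_ms = 60_000  # hypothetical one-minute budget
    for label, response_ms in [("fast site", 200), ("slow site", 1500)]:
        print(label, pages_within_budget(budget_ms, response_ms))
    # fast site 300
    # slow site 40
```

Under these assumptions, cutting the average response time from 1.5 seconds to 200 ms lets the same budget cover 300 pages instead of 40.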