Written by Juan Pedro Catalá


In my previous article, I analyzed how the loading speed of your web page affects SEO, the different tools for measuring it and the various factors that influence response time. In this article I will discuss the improvements that can be made at each step of a request to your website to reduce its loading time.

As I discussed in my previous article, when a URL is entered, the browser performs a series of steps to display the web page. Here is an example of a user who wants to access our site www.humanlevel.com.

Resolving the domain to an IP address

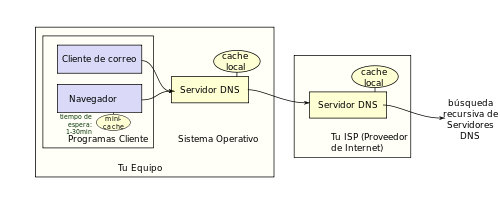

The first step is to find out the IP address of the server hosting www.humanlevel.com, so that the browser can connect to it and download the page. To do this, the browser sends a request to the DNS server configured in the user's Internet connection, which returns the IP of the server where the www.humanlevel.com website is hosted.

This request is only made the first time a domain is accessed (or once the cached answer expires), because the result is then cached by the browser, the operating system and the router for a period of time. On subsequent requests to the same domain the IP is obtained from these caches, so the lookup time is negligible. Unfortunately, since this process happens outside our server, there is nothing we can optimize in this step.

Browser connection to our web server

Once the browser knows the IP of our server, it connects to it to request the page the user entered. This is the first step in which we can improve something: the closer the server hosting our website is to the user who wants to see it, the less time the browser takes to connect to it.

Therefore, if our target audience and most of our traffic come from a specific country, it makes the most sense to host our website in that country. Likewise, if much of our traffic is concentrated in one region within a country, it would be best to find a hosting provider whose servers are in that region.
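To put rough numbers on the distance argument, here is a back-of-the-envelope sketch in Python. It assumes signals in optical fiber travel at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum) and ignores routing detours, TLS negotiation and server processing time, so real figures will be higher; the distances are illustrative, not measured routes.

```python
# Rough lower bound on network latency as a function of server distance.
# Assumption: signals in optical fiber travel at ~200,000 km/s; real routes
# are longer than the straight-line distance, so actual latency is higher.
FIBER_KM_PER_SECOND = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Minimum time (ms) for a packet to reach the server and come back."""
    return distance_km * 2 * 1000 / FIBER_KM_PER_SECOND


def min_first_response_ms(distance_km: float, handshake_round_trips: int = 1) -> float:
    """Lower bound until the first response byte on a NEW connection.

    A new TCP connection needs one round trip for the handshake before the
    HTTP request can be sent, plus one more round trip for request/response.
    TLS would add further round trips (not modeled here).
    """
    return (handshake_round_trips + 1) * min_round_trip_ms(distance_km)


if __name__ == "__main__":
    for km in (100, 1_000, 6_000):  # illustrative distances only
        print(f"{km:>5} km: RTT >= {min_round_trip_ms(km):.0f} ms, "
              f"first response >= {min_first_response_ms(km):.0f} ms")
```

Every extra round trip multiplies the distance penalty, which is also why avoiding new connections, as described next, pays off.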

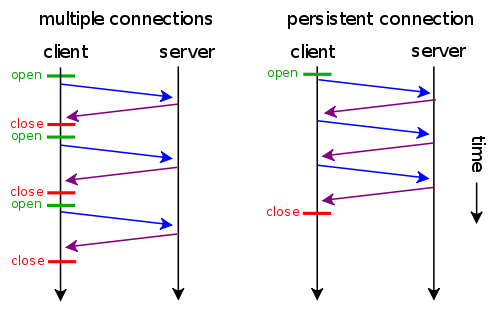

One of the things we should enable on our server is support for persistent connections, known as “Keep-Alive”. This allows the browser to reuse its initial connection to our server for multiple resource requests (CSS style sheets, images, JavaScript, …), avoiding the time and resources wasted on connecting to the server again.

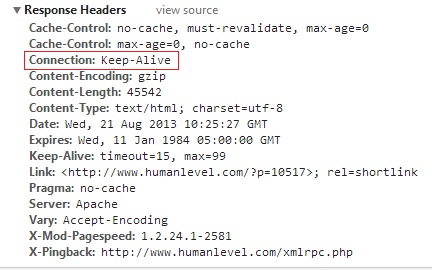

We can check whether our server has “Keep-Alive” enabled by inspecting its HTTP response headers in Chrome's developer tools (F12, Network tab) and seeing whether it responds with the “Connection: Keep-Alive” header.
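The same check can be scripted. This Python sketch uses the standard library's http.client to send a HEAD request and then interprets the Connection header. It encodes one caveat: under HTTP/1.1, connections are persistent by default unless the server sends “Connection: close”, so a missing Keep-Alive header does not by itself mean persistence is disabled; under HTTP/1.0 the explicit keep-alive token is required. The function names are illustrative, and the URL in the usage comment is only an example.

```python
import http.client
from urllib.parse import urlsplit


def is_persistent(http_version: int, headers: dict[str, str]) -> bool:
    """Decide whether the server keeps the connection open.

    http_version follows http.client's convention: 10 = HTTP/1.0, 11 = HTTP/1.1.
    headers must use lower-case names.
    """
    tokens = {t.strip().lower() for t in headers.get("connection", "").split(",")}
    if "close" in tokens:
        return False
    if http_version >= 11:
        return True  # HTTP/1.1 connections are persistent by default
    return "keep-alive" in tokens  # HTTP/1.0 needs an explicit opt-in


def fetch_headers(url: str, timeout: float = 10.0) -> tuple[int, dict[str, str]]:
    """Send a HEAD request and return (HTTP version, lower-cased headers)."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        conn.request("HEAD", parts.path or "/")
        response = conn.getresponse()
        response.read()  # HEAD has no body, but drain the response anyway
        return response.version, {k.lower(): v for k, v in response.getheaders()}
    finally:
        conn.close()


# Usage (needs network access):
# version, headers = fetch_headers("https://www.humanlevel.com/")
# print(headers.get("connection", "(none)"), is_persistent(version, headers))
```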

Page processing on the server

Now that the visitor's browser has connected to the server hosting our web page, the server has to process the page to generate the HTML it will send to the browser. The most common way to do this is to run a series of database queries to obtain the information to be displayed. The fewer the queries and the less time they take to execute, the sooner the server will send the HTML to the browser.

One way to make fewer database queries is to cache their results for a period of time, or until the underlying value changes in the database. Another way to reduce response time is to group several queries into a single request to the database, saving the time lost to latency between the web server and the database server.
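Both ideas can be sketched with Python's built-in sqlite3 module and an in-memory database. The articles table, its columns and the 60-second lifetime are hypothetical; in production the cache would more likely live in a shared store, and the database would sit on a separate server, where each saved round trip matters more.

```python
import sqlite3
import time
from typing import Any, Callable


class TTLCache:
    """Keeps computed values for ttl_seconds, or until invalidated explicitly."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries: dict[Any, tuple[float, Any]] = {}

    def get_or_compute(self, key: Any, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: no database query needed
        value = compute()
        self._entries[key] = (now + self.ttl_seconds, value)
        return value

    def invalidate(self, key: Any) -> None:
        """Call this when the underlying data changes in the database."""
        self._entries.pop(key, None)


def fetch_titles(db: sqlite3.Connection, ids: list[int]) -> dict[int, str]:
    """Fetch several rows with ONE query instead of one query per id."""
    if not ids:
        return {}
    placeholders = ",".join("?" for _ in ids)  # only "?" markers, values are bound
    rows = db.execute(
        f"SELECT id, title FROM articles WHERE id IN ({placeholders})", ids
    ).fetchall()
    return dict(rows)


# Hypothetical schema used only for this illustration.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE articles (id INTEGER PRIMARY KEY, title TEXT)")
db.executemany("INSERT INTO articles VALUES (?, ?)",
               [(1, "WPO basics"), (2, "Keep-Alive"), (3, "GZIP")])

cache = TTLCache(ttl_seconds=60)
titles = cache.get_or_compute("home", lambda: fetch_titles(db, [1, 2, 3]))
```

Injecting the clock keeps the cache testable; the expiry rule covers the “period of time” case and invalidate() covers the “value changed” case.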

Depending on how the page is programmed, the server will either send the HTML to the browser as it is generated or wait until the whole page is complete before sending it. Ideally, the beginning of the HTML, up to the closing </head> tag, should be sent as soon as possible, so the browser can start downloading the CSS and JavaScript files referenced in the header while the rest of the page is still being generated on the server.
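In Python, for example, a WSGI application can return a generator so that the head is yielded before the slow part of the page is built. This is a minimal sketch: render_body is a hypothetical stand-in for the database-driven part of the page, and whether each chunk actually reaches the browser immediately depends on the web server and on any buffering or compression layer in front of the application.

```python
import time
from typing import Callable, Iterable

# Everything up to </head> is static, so it can be sent immediately and the
# browser can start fetching the CSS it references.
HEAD = (
    "<!DOCTYPE html>\n<html lang=\"en\">\n<head>\n"
    "<meta charset=\"utf-8\" />\n"
    "<link rel=\"stylesheet\" href=\"estilo.css\" />\n"
    "<title>My title</title>\n</head>\n"
)


def render_body() -> str:
    """Hypothetical slow part: database queries and templating."""
    time.sleep(0.05)  # simulate query time
    return "<body>\n<p>Page content</p>\n</body>\n</html>\n"


def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])

    def chunks() -> Iterable[bytes]:
        yield HEAD.encode("utf-8")           # sent before the body is built
        yield render_body().encode("utf-8")  # sent after the slow work

    return chunks()


# To try it locally (blocks until interrupted):
# from wsgiref.simple_server import make_server
# make_server("localhost", 8000, app).serve_forever()
```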

Receiving and processing the page in the browser

The browser reads the HTML of the page as it arrives from the server and requests external resources (CSS, JS, images, …) as it finds them, up to a limit: browsers cap the number of simultaneous downloads from the same domain. This number depends on the browser (Internet Explorer, Firefox, Chrome, …) and its version, and ranges from 2 to 10, or more if the user has changed the browser settings, which is uncommon.

To increase the number of simultaneous downloads, you can create static domains mirroring the main one to serve the page's external resources. For example, Internet Explorer 8 allows only 6 simultaneous requests per domain; if we serve the site from www.humanlevel.com and the static content from s1.humanlevel.com and s2.humanlevel.com, we can have up to 18 downloads in parallel, with a consequent improvement in page load time.
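The arithmetic, plus one practical detail, can be sketched as follows. Whatever rule assigns a file to a static domain should be deterministic, so the same image is always requested from the same host and can be reused from the browser cache. The CRC32-based rule below is one assumed way of doing that, not the article's method; the host names are the article's example domains.

```python
import zlib

STATIC_HOSTS = ["s1.humanlevel.com", "s2.humanlevel.com"]


def max_parallel_downloads(per_host_limit: int, host_count: int) -> int:
    """Upper bound on simultaneous downloads when resources are spread over hosts."""
    return per_host_limit * host_count


def static_url(path: str, hosts: list[str] = STATIC_HOSTS) -> str:
    """Map a resource path to one static host, always the same one for a given path.

    zlib.crc32 is used instead of hash() because Python randomizes str hashes
    per process, which would move files between hosts after every restart.
    """
    index = zlib.crc32(path.encode("utf-8")) % len(hosts)
    return f"//{hosts[index]}{path}"


# Internet Explorer 8: 6 requests per host, spread over www + s1 + s2.
print(max_parallel_downloads(6, 1 + len(STATIC_HOSTS)))  # 18
print(static_url("/img/logo.png"))
```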

To gain speed when serving static content, it is best to add DNS pre-resolution of the static domains inside the <head> section of the page; the DNS query is then performed asynchronously while the rest of the page is still being processed. To do this, add the following code:

```html
<link rel="dns-prefetch" href="//s1.humanlevel.com" />
<link rel="dns-prefetch" href="//s2.humanlevel.com" />
```

Serving static content from other domains also prevents the cookies our code uses from being sent with every resource request, since these cookies should only be set on the main domain, in this example www.humanlevel.com. Care must be taken when creating static domains that mirror the main one: only requests for static content should reach them, or the appropriate redirects must be set up to prevent the site from being indexed under the static domain. Because resolving the IP of each static domain has a time cost, as I mentioned in the first step, I do not recommend having more than two or three static domains, unless it is to spread the load of a website with huge traffic (in which case a CDN would be a better choice) or with a lot of graphic content.
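The rule that static domains should only serve static content can be expressed as a small routing decision. This Python sketch assumes static content is recognized by file extension (the extension list is an assumption to adapt to your site) and that the main site is served over HTTPS; any other request on a static domain gets a 301 redirect to the main domain so the page is not indexed under s1 or s2. How it is wired into a particular web server is left open.

```python
import posixpath
from typing import Optional
from urllib.parse import urlsplit

MAIN_HOST = "www.humanlevel.com"
STATIC_HOSTS = {"s1.humanlevel.com", "s2.humanlevel.com"}
# Assumed set of extensions treated as static content.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp", ".woff2", ".ico"}


def route(host: str, path: str) -> tuple[int, Optional[str]]:
    """Return (status, redirect_location) for a request.

    200 means "serve normally"; 301 means redirect to the main domain.
    """
    host = host.lower().split(":")[0]  # ignore an explicit port
    if host not in STATIC_HOSTS:
        return 200, None
    extension = posixpath.splitext(urlsplit(path).path)[1].lower()
    if extension in STATIC_EXTENSIONS:
        return 200, None
    # A page requested on a static domain: send visitors and crawlers to www.
    # Assumption: the main site uses HTTPS.
    return 301, f"https://{MAIN_HOST}{path}"
```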

Another option that should be enabled on our web server is GZIP compression for all text files (HTML, CSS, JS, XML, …), since it drastically reduces download time. To find out whether your page has GZIP enabled, check whether the response includes the “Content-Encoding” header with the value “gzip”.
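Python's standard gzip module gives a feel for the savings on repetitive text such as HTML, and the header check can be written as a small helper. The sample markup is made up and real ratios depend on the content; web servers normally compress responses themselves, so this is only an illustration.

```python
import gzip

# Made-up, repetitive HTML similar to a product listing.
html = "".join(
    f'<li class="product"><a href="/product/{i}">Product {i}</a></li>\n'
    for i in range(200)
).encode("utf-8")

compressed = gzip.compress(html)
print(f"original: {len(html)} bytes, gzip: {len(compressed)} bytes "
      f"({100 * len(compressed) / len(html):.0f}% of the original)")


def is_gzipped(response_headers: dict[str, str]) -> bool:
    """True if the (lower-cased) response headers declare gzip encoding."""
    encodings = response_headers.get("content-encoding", "")
    return "gzip" in {e.strip().lower() for e in encodings.split(",")}
```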

One thing to keep in mind is where the <script> tags (which load JavaScript) and the <link> tags (which load CSS) are placed in the HTML. The <link> tags should go inside the <head>, as early as possible, and the <script> tags just before the closing </body> tag. The resulting HTML would look something like this:

```html
<!DOCTYPE html>
<html lang="es">
<head>
<meta charset="utf-8" />
<link rel="dns-prefetch" href="//s1.humanlevel.com" />
<meta name="viewport" content="width=device-width" />
<link rel="stylesheet" href="estilo.css" />
<title>My title</title>
<!-- ... rest of the meta tags and information ... -->
</head>
<body>
<!-- ... my page content ... -->
<script src="script.js" async></script>
</body>
</html>
```

As shown in the example, ideally a page should download only one CSS style sheet and one JavaScript file, as this reduces the number of connections to the server.
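Getting down to a single CSS file and a single JavaScript file usually means concatenating the individual files at build time. A minimal Python sketch, assuming hypothetical css/ and js/ source folders and that the order of the inputs matters (later CSS rules override earlier ones, and scripts may depend on each other); real build tools typically also minify.

```python
from pathlib import Path


def bundle(sources: list[Path], output: Path, separator: str = "\n") -> Path:
    """Concatenate text files, in the given order, into a single file.

    For JavaScript, passing separator=";\n" guards against source files
    that do not end with a semicolon.
    """
    parts = [src.read_text(encoding="utf-8") for src in sources]
    output.write_text(separator.join(parts), encoding="utf-8")
    return output


# Hypothetical layout; list order is the order inside the bundle.
# bundle([Path("css/reset.css"), Path("css/layout.css")], Path("estilo.css"))
# bundle([Path("js/menu.js"), Path("js/slider.js")], Path("script.js"), ";\n")
```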

It is very important to place the <script> tag at the end of the <body>, because as soon as the browser encounters a <script> tag it stops processing the page and blocks the download of the remaining external resources. No matter how many simultaneous downloads we have enabled, they will be of no use if the <script> tag is not placed correctly.

This blocking problem is solved by the async attribute that HTML5 adds to the <script> tag: with it, we tell the browser to load and execute the JavaScript asynchronously, without blocking the rendering of the page. The problem is that older browsers (Internet Explorer 9 and earlier, and older versions of Firefox) do not support it.

Despite the improvement the async attribute brings, we must be careful about loading too many scripts on our pages, especially external scripts such as social network widgets: even though these scripts are asynchronous, they load an iframe containing scripts that are not, which blocks downloads on our page. The best solution is to load all external scripts once our page has fully loaded, for example using the JavaScript window.onload event.

Once we have optimized the download of the page's external resources, the next step is to avoid downloading them again on other pages that also use them. To do this, configure the web server to add the header “Cache-Control: public, max-age=31536000” to static resources. This header allows the browser to cache static files for a year (31,536,000 seconds), so it does not have to download them again when the user returns to our website.
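As a sketch, the rule can be written as a function that picks headers by file type; the extension list is an assumption. Because the browser will not re-check these files for a year, a common companion practice (assumed here, not taken from the article) is to put a content hash in each static file name, so a changed file gets a new URL instead of a stale cached copy.

```python
import hashlib
from pathlib import PurePosixPath

ONE_YEAR_SECONDS = 365 * 24 * 60 * 60  # 31,536,000
# Assumed set of long-lived static file types.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".woff2", ".ico"}


def cache_headers(path: str) -> dict[str, str]:
    """Year-long caching for static files; other responses keep server defaults."""
    if PurePosixPath(path).suffix.lower() in STATIC_EXTENSIONS:
        return {"Cache-Control": f"public, max-age={ONE_YEAR_SECONDS}"}
    return {}


def versioned_name(path: str, content: bytes) -> str:
    """Insert a short content hash: /css/estilo.css -> /css/estilo.<hash>.css."""
    p = PurePosixPath(path)
    digest = hashlib.sha256(content).hexdigest()[:8]
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))
```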

To conclude

In this article I have reviewed, in general terms, the process a browser follows to load a web page and the improvements we can make at each step to speed it up. If you apply all of them, you should see a substantial improvement in your website's loading time.