Written by Aurora Maciá


On August 7, 2025, OpenAI announced the rollout of GPT-5. According to its own landing page, it is “the smartest, fastest, and most useful model to date, with built-in reasoning.” Users quickly disputed this claim, and not without reason. Years earlier, the company had cast GPT-5 as the model that would bring about the Great AI Revolution. This built up hype that was, in hindsight, excessive, and it often went hand in hand with talk of achieving AGI (Artificial General Intelligence). The day before the launch, Sam Altman tweeted an image of the Death Star from Star Wars rising over the horizon. He later clarified that it represented OpenAI's competitors, while OpenAI saw itself as the rebels.

Leaving aside the CEO’s curious marketing strategies, most users reacted with disappointment. Expectations were high, and although GPT-5 surpasses its predecessor in some capabilities, the leap does not seem as big as the one from GPT-3 to GPT-4. The model is also still far from AGI.

A hostile welcome

Some users were accustomed to the friendly and enthusiastic voice of GPT-4o. They complained about the shorter, more concise answers GPT-5 now gives. They also asked to be able to use the previous model again, because OpenAI had removed the option to choose which model answers a query.

This automatic routing is one of the main new features introduced with GPT-5. The system decides which model is best suited to a request and switches from one to another when it deems it necessary. OpenAI later reconsidered for the users most eager to get their beloved GPT-4o back. It now lets paying subscribers select it again.

Social media has been flooded with examples of wrong answers. So far, GPT-5 has struggled with several tasks:

- generating accurate maps with correctly spelled country or state names
- counting how many letters are in a word
- telling how many fingers appear in an image of a hand
- listing the U.S. presidents in chronological order

“It’s like having a team of experts at your disposal for anything you want to know,” OpenAI says on its landing page. Given these errors, it might be wise to have a team of human experts double-check the answers. The company has stated that the model will get smarter over time. However, Silicon Valley’s blind faith in the “move fast and break things” mantra seems to ignore a simple fact: users’ trust is lost far faster than it is gained. This launch once again highlights the importance of staying critical of big tech’s promises and keeping a cool head. Otherwise, we may end up jumping on bandwagons that don’t even have horses hitched to them.

After analyzing press releases and GPT-5’s actual capabilities, journalist Brian Merchant argues that the model is aimed more at businesses. In his view, it is meant to help them “mass-automate skilled, highly-trained, and creative work.” In fact, OpenAI has published a PDF about the model aimed specifically at enterprises. Merchant also notes that, judging by some of Altman’s recent remarks, achieving AGI no longer seems to be a short-term priority for OpenAI.

OpenAI has also deprioritized building a model that stores massive amounts of information, focusing instead on improving its reasoning capabilities. The rationale is simple: a model doesn’t need to contain all the information if it can retrieve it whenever needed.

SEO stays in the ring

“Searching the Internet…”

This message now often appears for a few seconds after you submit a query to ChatGPT running GPT-5. You no longer need to enable the “Search” function or write a prompt that explicitly asks it to search the web. If the model decides that a question is best answered by researching online, it will do so automatically. You can still force a web search, as with previous models. If you want ChatGPT to retrieve, understand, and cite your content, it is more important than ever to present yourself as a reliable, well-structured, and up-to-date source of information.

Better sources, fewer errors

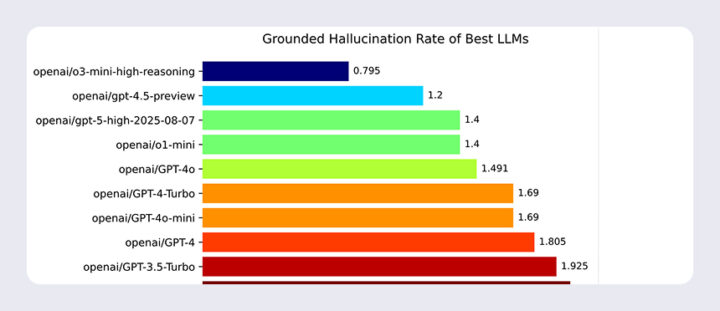

Although the examples above might suggest otherwise, OpenAI says it has been working on reducing hallucinations and improving factual accuracy: “With web search enabled on anonymous prompts representative of ChatGPT production traffic, GPT-5’s answers are about 45% less likely to contain factual errors compared to GPT-4o.”

Vectara, a platform that publishes hallucination leaderboards, found that GPT-5 has a grounded hallucination rate of 1.4%, compared with 1.8% for GPT-4. GPT-4.5 performed better, at 1.2%.

OpenAI doesn’t specify how it selects grounding sources. Even so, models will likely become increasingly selective and favor sources with stronger authority and trust signals. In SEO terms, these are E-E-A-T signals: Experience, Expertise, Authoritativeness, and Trustworthiness.

How GPT-5 cites its sources

The way GPT-5 presents links to its sources will likely evolve over time. For now, here is how it cites external websites as of August 2025:

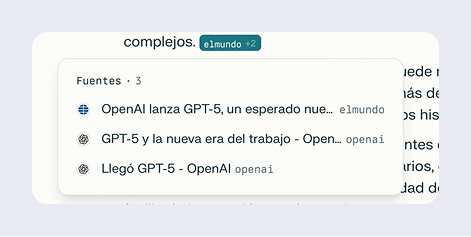

As with the “Search” function in earlier models, GPT-5 displays small clickable links next to the information. Each link highlights one of the possible sources, and the rest are hidden behind a “+n” label.

Clicking a link opens a preview showing the source article’s title and introduction. You can browse the remaining sources with the arrows. Again, previous versions already worked this way when SearchGPT was used. In both the inline link and the pop-up, the first source gets strikingly more prominence than the others. Other chatbots, such as Perplexity, make it easier to access all the sources consulted.

You can still view all the sources at the end of the answer by clicking “Sources.” This opens a sidebar listing every site consulted. For some queries, ChatGPT also shows a block of three highlighted pieces of content. In this example, those three were also the first sources in the inline links, which gave them even more visibility.

Conclusion

GPT-5 introduces several improvements over its predecessors, but the expectations OpenAI built around its launch were overblown. Still, the company’s strategic shift tells us something about the future of web content. OpenAI is moving toward deep reasoning and away from storing massive amounts of information. As a result, models will need high-quality information more than ever to ground their answers.

But will this ability to analyze and synthesize sources prove valuable enough for users?

If ads eventually appear in the answers, will ChatGPT’s interface still be meaningfully different from Google’s SERPs, or will the two become increasingly alike?

It seems only time will tell.

If you’d like to learn how to get your content cited as a source, Human Level can help. We offer customized strategies for semantic content alignment, technical optimizations to improve information retrieval, and tools to monitor mentions. Contact us to discuss your project.